At first glance, it is a straightforward request: measure two voltages representing the Cartesian coordinates of a point, let’s call them Ux and Uy, and calculate the polar representation, i.e., the radius R, the square root of the sum of squares. In fact, the latter part is irrelevant for the current topic. The only important fact is that the calculation must be fully symmetrical in Ux and Uy: if Ux=12.000000 and Uy=5.000000, resulting in R=sqrt(12^2+5^2)=13.000000, then Ux=5.000000 and Uy=12.000000 must give exactly the same result.

This can only be achieved if the quantizer transfer functions of both ADCs are perfectly identical. Simply speaking, for every applied voltage level, both ADCs, X and Y, must return the same digital code.
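A minimal numerical illustration of this requirement, assuming a hypothetical ±20 V, 24-bit quantizer with a parabolic INL bow (span, bit depth and bow shape are assumptions, not AD7710 or Prema data): with two identical transfer functions, swapping Ux and Uy gives a bit-identical R; with mismatched ones it does not.

```python
import math

FULL_SCALE = 20.0              # hypothetical bipolar span: -20 V ... +20 V
LSB = 2 * FULL_SCALE / 2**24   # 24-bit code width over the full span

def make_adc(inl_ppm):
    """Hypothetical ADC model: ideal 24-bit quantizer plus a parabolic
    INL bow peaking at inl_ppm of full scale (zero at both span ends)."""
    def convert(u):
        bow = inl_ppm * 1e-6 * FULL_SCALE * (1.0 - (u / FULL_SCALE) ** 2)
        return round((u + bow) / LSB) * LSB
    return convert

def radius(adc_x, adc_y, ux, uy):
    """R computed from the two digitized coordinates."""
    x, y = adc_x(ux), adc_y(uy)
    return math.sqrt(x * x + y * y)

def swap_asymmetry(adc_x, adc_y, a, b):
    """R(a, b) - R(b, a); exactly zero for a perfectly symmetric pair."""
    return radius(adc_x, adc_y, a, b) - radius(adc_x, adc_y, b, a)

same = make_adc(15)
print(swap_asymmetry(same, same, 12.0, 5.0))                  # identical ADCs
print(swap_asymmetry(make_adc(15), make_adc(0), 12.0, 5.0))   # mismatched ADCs
```

The first line prints 0.0; the second shows a difference on the order of 70 µV, roughly 5 ppm of R, caused purely by the mismatch between the two transfer functions.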

A simple solution would be to use the same ADC for both channels. However, this will increase random noise, because the integration time per channel is only half of the available signal time. And it is not applicable in the given case anyway, because Ux and Uy change slowly but in a completely unpredictable way, so alternating measurements would not capture both coordinates at the same time.
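The noise penalty of multiplexing one ADC can be put into numbers with a short sketch, assuming the random noise is white (uncorrelated from sample to sample), so its rms value falls with the square root of the integration time; 1/f noise and drift do not follow this rule.

```python
import math

def relative_noise(duty):
    """rms white noise relative to full-time integration, when an averaging
    ADC integrates the signal only for a fraction `duty` of the time."""
    if not 0.0 < duty <= 1.0:
        raise ValueError("duty must be in (0, 1]")
    return 1.0 / math.sqrt(duty)

penalty = relative_noise(0.5)  # one ADC multiplexed between X and Y
print(f"{penalty:.3f}x rms, {20 * math.log10(penalty):.2f} dB, "
      f"{math.log2(penalty):.2f} bit")
```

This prints `1.414x rms, 3.01 dB, 0.50 bit`: halving the integration time costs about half a bit of resolution.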

Therefore, there seems to be no other way than measuring both values simultaneously, over well-defined and synchronized time intervals, and then doing whatever calculation is required.

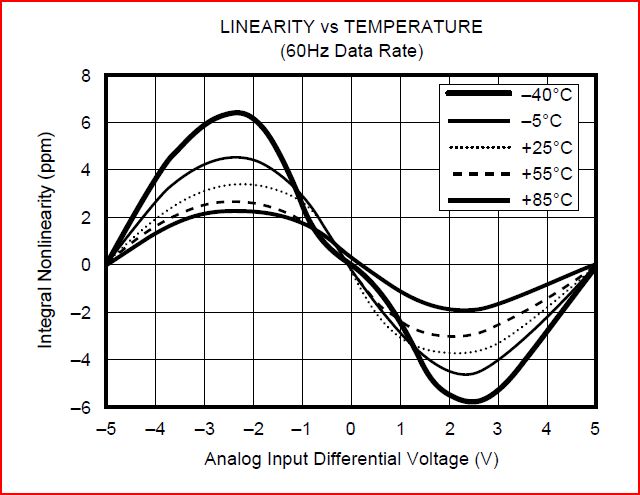

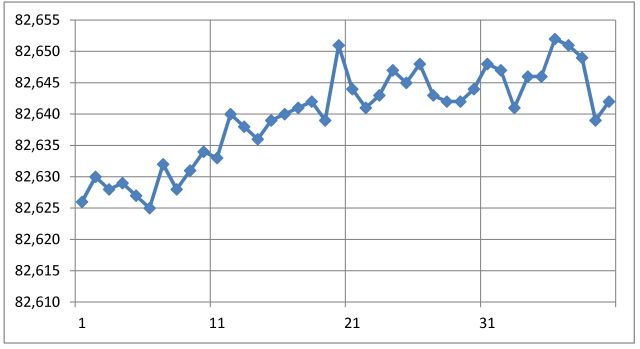

After spending 2 hours calculating, I can say that we need about 1 ppm linearity to make this work as desired; allowing for some noise, unpredictable drift and other error sources, better 0.2-0.5 ppm.

Also, I realized that a bit of noise would be acceptable, as long as it is coherent, i.e., affecting both channels in exactly the same way. So cables and layout will be routed for as much symmetry as possible, and both channels will be thermally coupled as closely as possible.
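A first-order sketch of why only the *difference* between the two transfer functions matters, assuming small, smooth errors e_X(u) and e_Y(u) that the X and Y converters add to the true input (this notation is introduced here for illustration):

$$
\tilde R(U_x,U_y)=\sqrt{\big(U_x+e_X(U_x)\big)^2+\big(U_y+e_Y(U_y)\big)^2}\approx R+\frac{U_x\,e_X(U_x)+U_y\,e_Y(U_y)}{R}
$$

$$
\tilde R(U_x,U_y)-\tilde R(U_y,U_x)\approx\frac{U_x\big[e_X(U_x)-e_Y(U_x)\big]-U_y\big[e_X(U_y)-e_Y(U_y)\big]}{R}
$$

Any error term common to both converters cancels in the second line, which is why coherent noise is tolerable for the symmetry requirement, even though it still shifts R itself.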

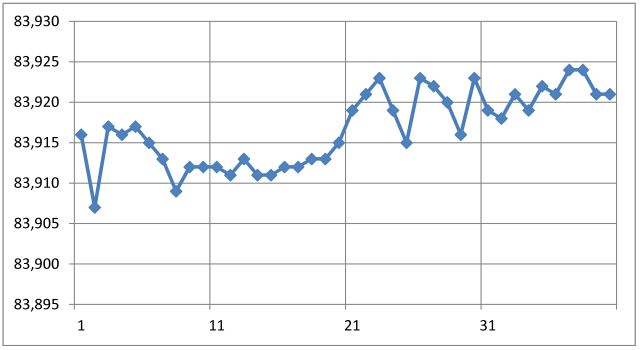

How can this be achieved? The first attempt, even before I learned about this issue, was to use the best ADCs at hand, two Prema 5017 7.5-digit multimeters, but even these left something to be desired (4 ppm linearity deviation per channel, although they seem to be a bit better than spec).

My first suggestion was to switch from the Prema instruments to two HP/Agilent/Keysight 3458A digital multimeters, which reach about 0.05-0.1 ppm linearity in the 10 V range. Impressive, but that is 24 kg of instruments, worth about USD 20k. Not an option, except for a proof of concept, which has already been achieved using the Prema devices.

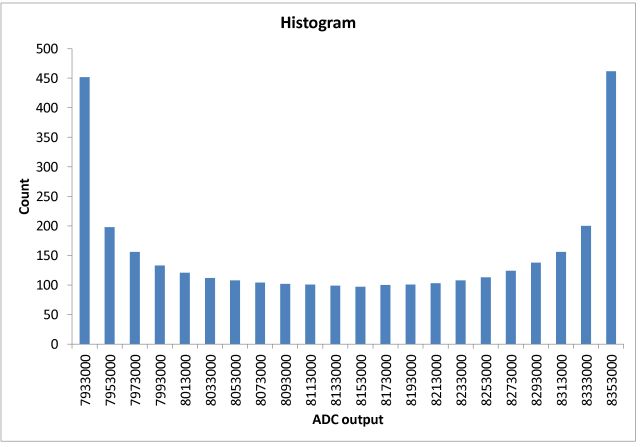

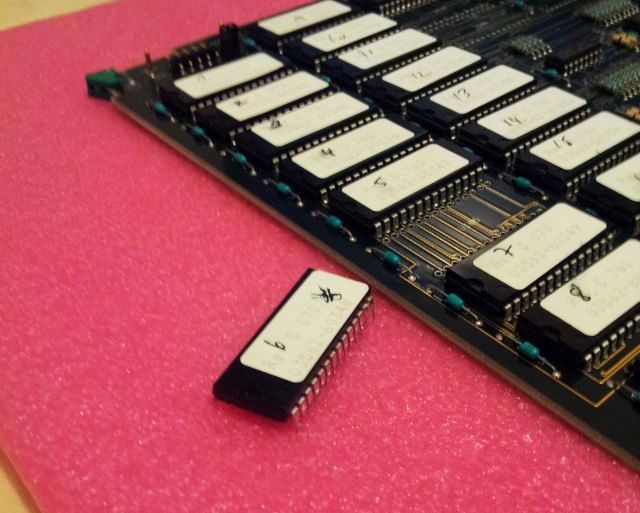

Now, what kind of intricate sampling scheme could make this work with off-the-shelf parts? Well, first we need a good starting point, a linear ADC. Looking through various datasheets, let’s discuss one particular part with impressive performance ratings, the AD7710. This is a 24-bit sigma-delta converter with ±15 ppm nonlinearity. So we need to find a way to improve this by a factor of, say, 30 to 50. That seems viable with some error correction mechanism. Also, we don’t want the error correction to cause any dead time (i.e., there can’t be an autocalibration feature that takes significant time and leaves gaps in the signal measurement).
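To get a feel for the required improvement, a quick conversion of the ppm figures into 24-bit LSBs, assuming the ppm values refer to the full 24-bit code span (whether the datasheet figure refers to the unipolar or bipolar span is not checked here):

```python
CODES_24BIT = 2**24
AD7710_INL_PPM = 15.0   # datasheet figure quoted above

def ppm_to_lsb(ppm, codes=CODES_24BIT):
    """INL given in ppm of full scale, expressed in LSBs of the full code span."""
    return ppm * 1e-6 * codes

def required_improvement(target_ppm, start_ppm=AD7710_INL_PPM):
    """Factor by which the starting INL must be reduced to reach the target."""
    return start_ppm / target_ppm

for target in (0.5, 0.3):
    print(f"{target} ppm = {ppm_to_lsb(target):.1f} LSB, "
          f"needs {required_improvement(target):.0f}x improvement")
```

The datasheet figure corresponds to roughly 252 LSB, while the targets come out at about 8.4 LSB (30x) and 5.0 LSB (50x), i.e. only a few LSB at the 24-bit level.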

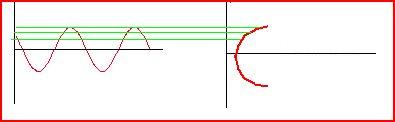

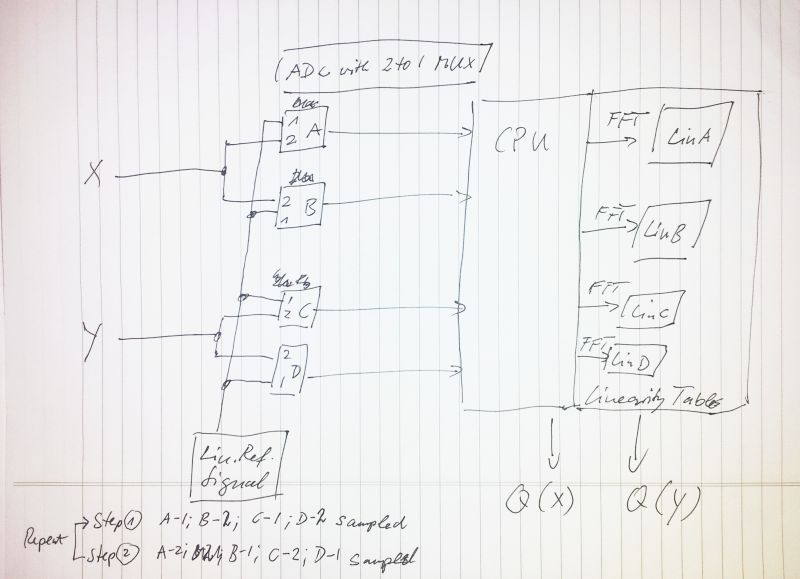

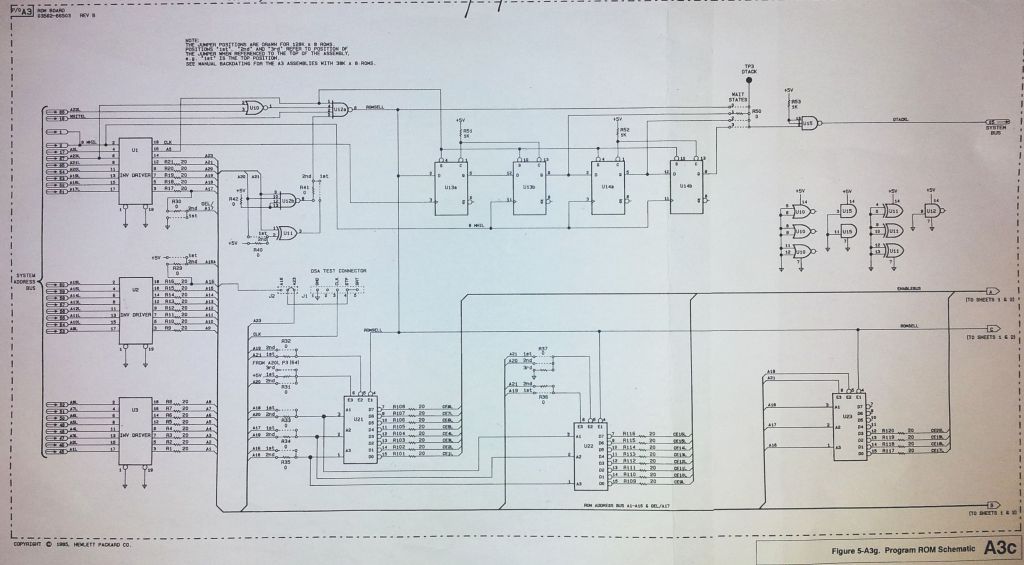

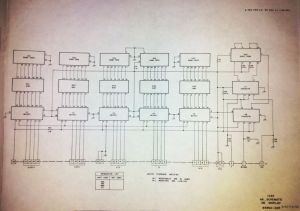

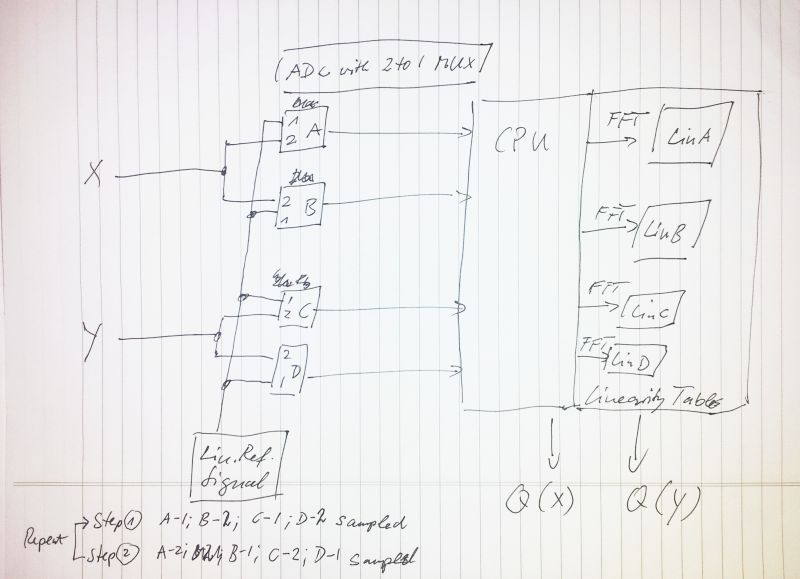

So, here is the proposed scheme:

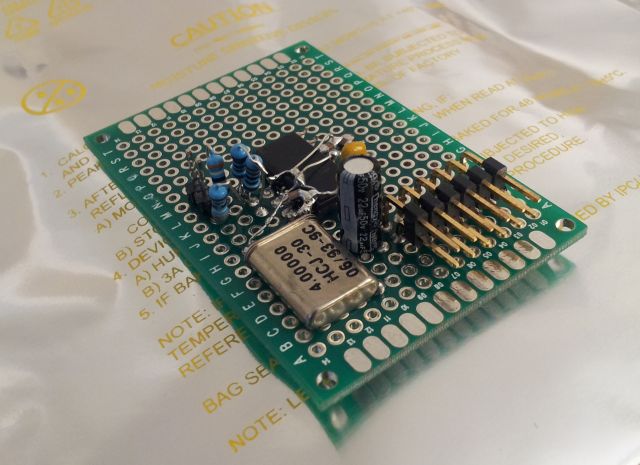

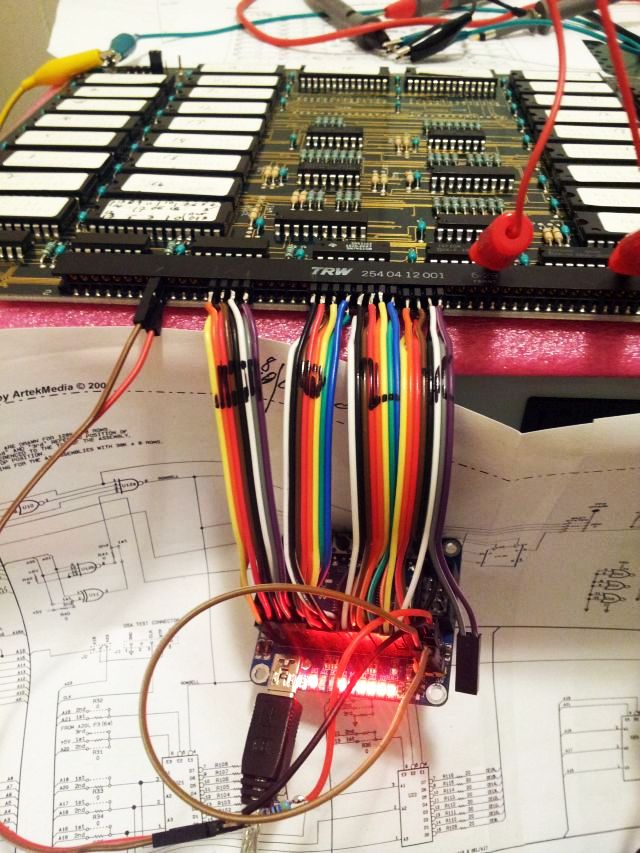

It’s a bit rough, but actually not too complicated. What we intend to do is to use 4 ADCs (named A, B, C, D) rather than two, and to use ADCs that have a built-in 2:1 MUX, so each can sample either channel 1 or channel 2. The “1” channels, A-1, B-1, C-1 and D-1, are connected to a reference source that allows analysis of the ADC non-linearity, and the “2” channels, well, they are for the actual X and Y signals.

The whole thing is connected to a CPU, which will need a few kbytes of RAM and some FFT/histogram processing power, not an issue these days. It acquires the digital representations Q'(X) and Q'(Y), holds the non-linearity information for each of the ADCs (called “linearity tables”, LIN A through LIN D), and uses this data to calculate the corrected, “ultra-linear” digital representations of the input voltages, Q(X) and Q(Y).
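A minimal sketch of how the CPU could apply one of the linearity tables, assuming (hypothetically) that each LIN table stores the measured INL in LSB at a set of breakpoint codes, with linear interpolation in between. The class name, table layout and the sign convention Q = Q' - INL(Q') are illustrative choices, not the actual design.

```python
from bisect import bisect_right

class LinearityTable:
    """Hypothetical per-ADC correction table (LIN A ... LIN D): INL in LSB
    at strictly increasing breakpoint codes, interpolated linearly."""

    def __init__(self, codes, errors):
        if len(codes) != len(errors) or len(codes) < 2:
            raise ValueError("need matching lists with at least two points")
        if any(b <= a for a, b in zip(codes, codes[1:])):
            raise ValueError("breakpoint codes must be strictly increasing")
        self.codes = list(codes)
        self.errors = list(errors)

    def error_at(self, code):
        # Clamp outside the table, interpolate linearly inside it.
        if code <= self.codes[0]:
            return self.errors[0]
        if code >= self.codes[-1]:
            return self.errors[-1]
        i = bisect_right(self.codes, code) - 1
        c0, c1 = self.codes[i], self.codes[i + 1]
        e0, e1 = self.errors[i], self.errors[i + 1]
        return e0 + (e1 - e0) * (code - c0) / (c1 - c0)

    def correct(self, raw_code):
        """Corrected code Q = Q' - INL(Q'), returned as a float."""
        return raw_code - self.error_at(raw_code)
```

Because the ADCs swap roles, the CPU would look up the table of whichever converter produced a given sample, e.g. `tables["B"].correct(code)` for a hypothetical dict `tables` holding the four tables.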

Don’t ask how I will calculate the non-linearity correction coefficients. It’s already filling piles of paper, working through FFT and histogram analysis, and getting it coded might be an occupation for an upcoming rainy Saturday.
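For orientation only, here is the textbook sine-wave code-density (histogram) test, as described in IEEE Std 1241. It is swapped in as an illustration and is not the procedure worked out on paper. It assumes a noise-free toy 8-bit ADC, a sine with known offset and amplitude that never clips, and coherent sampling. A real 24-bit converter with noise would need code binning and far more samples.

```python
import math
from bisect import bisect_right

def quantize(u, transitions):
    """Output code = number of transition levels at or below u."""
    return bisect_right(transitions, u)

def simulate_codes(transitions, offset, amplitude, n_samples, n_cycles):
    """Digitize a coherently sampled sine (n_cycles coprime to n_samples)."""
    if math.gcd(n_cycles, n_samples) != 1:
        raise ValueError("n_cycles and n_samples must be coprime")
    w = 2 * math.pi * n_cycles / n_samples
    return [quantize(offset + amplitude * math.sin(w * i), transitions)
            for i in range(n_samples)]

def estimate_transitions(codes, n_codes, offset, amplitude):
    """Sine-wave code-density estimate of the transition levels T_k.

    For a sine C + A*sin(theta) with uniformly distributed phase,
    P(u < T) = 1/2 + asin((T - C)/A)/pi, hence T = C - A*cos(pi*F),
    where F is the measured fraction of samples with a code below k.
    """
    counts = [0] * n_codes
    for c in codes:
        counts[c] += 1
    total = len(codes)
    estimates = {}
    below = 0
    for k in range(1, n_codes):
        below += counts[k - 1]            # samples with code < k
        frac = below / total
        if 0.0 < frac < 1.0:              # skip levels the sine never crosses
            estimates[k] = offset - amplitude * math.cos(math.pi * frac)
    return estimates

def inl_lsb(estimates):
    """INL per transition, relative to the ideal level k - 0.5 (in LSB)."""
    return {k: t - (k - 0.5) for k, t in estimates.items()}
```

Feeding `simulate_codes` with a set of deliberately bent transition levels and passing the result through `estimate_transitions` and `inl_lsb` recovers the bow to within a small fraction of an LSB.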

The sampling scheme works in two steps: first, A and C measure the cal signal while B and D measure X and Y; then, in the second step, B and D measure the cal signal while A and C measure X and Y.
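The alternation can be written down as a small schedule. This is a sketch: which ADC of each pair takes X and which takes Y (B and A for X, D and C for Y) is an assumption, since the text only names the pairs.

```python
from itertools import cycle, islice

# Hypothetical role assignment for the two steps described above:
# one pair samples the cal signal (MUX input 1), the other X and Y (input 2).
PHASES = (
    {"A": "cal", "C": "cal", "B": "X", "D": "Y"},
    {"B": "cal", "D": "cal", "A": "X", "C": "Y"},
)

def schedule(n_steps):
    """Yield (step, {adc: role}) for n_steps consecutive conversion periods."""
    for step, phase in enumerate(islice(cycle(PHASES), n_steps)):
        yield step, phase

def signal_source(phase, signal):
    """Return which ADC digitizes `signal` ("X" or "Y") in this phase."""
    return next(adc for adc, role in phase.items() if role == signal)

def cal_fraction(adc, n_steps):
    """Fraction of conversion periods in which `adc` digitizes the cal signal."""
    roles = [phase[adc] for _, phase in schedule(n_steps)]
    return roles.count("cal") / n_steps
```

In every step X and Y are both being measured, so there is no dead time, while each converter still spends half of its conversions on the cal signal.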

Next question: what is a suitable cal signal that can be sampled to reveal the non-linear properties? Well, as we are only interested in the relative non-linearities of the converters, there is some freedom in choosing the signal, e.g., a triangle wave or a ramp function. But, as you might know, it’s not all that easy to generate a good linear ramp voltage: there are capacitors involved, and opamp integrators, and we are talking low frequency here, like a cal signal frequency of 10 Hz and measurement periods of minutes rather than seconds. This makes leakage currents significant and requires a lot of effort. And we run the risk of introducing second-order errors; preferably, the correction scheme should actually yield quantizer transfer functions for all ADCs that are as close to ideal linearity as possible.

So, what signal to use? The solution is simple: we need a perfectly clean sine wave. It is predictable over time, easy to handle numerically, and fairly easy to generate. For filtering reasons, noise and distortion of the signal should preferably be minimal. But how much is minimal?
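One reason a sine is “easy to handle numerically”: with a known frequency and an integer number of cycles in the record, the least-squares fit of amplitude, phase and offset reduces to three sums, and whatever the fit leaves over is distortion. This is a sketch of the standard three-parameter sine fit under that coherent-sampling assumption, not the planned processing.

```python
import math

def sine_fit_coherent(samples, cycles):
    """Least-squares fit y ~ a*cos + b*sin + c for a record holding exactly
    `cycles` periods (coherent sampling, 0 < cycles < N/2), where the basis
    functions are orthogonal. Returns (a, b, c, residuals)."""
    n = len(samples)
    if not 0 < cycles < n / 2:
        raise ValueError("need 0 < cycles < N/2 for the orthogonal shortcut")
    w = 2 * math.pi * cycles / n
    a = 2 / n * sum(y * math.cos(w * i) for i, y in enumerate(samples))
    b = 2 / n * sum(y * math.sin(w * i) for i, y in enumerate(samples))
    c = sum(samples) / n
    residuals = [y - (a * math.cos(w * i) + b * math.sin(w * i) + c)
                 for i, y in enumerate(samples)]
    return a, b, c, residuals
```

For a clean sine the residuals vanish to rounding error; adding a small cubic term to the samples leaves a third-harmonic residual that directly reflects the non-linearity.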

We will treat the signals, cal, X and Y, as dynamic signals (slowly but steadily changing, with R, see above, having certain very low-frequency components that we want to extract).

Some numbers: say we want to resolve 2 volts to about 1 µV; that’s a resolution of about 2 million counts, or, in bits, 21 significant bits of information. This corresponds to a dynamic range of about 20*log10(2^21)≈126 dB. A cross-check against the AD7710 datasheet shows that this is possible, for both dynamic and essentially-DC input conditions (with an effective resolution of about 22 bits at a 10 Hz sample rate). By averaging down to 2.5 Hz (4 samples, reducing white noise by sqrt(4)=2), we might gain 1 bit and get about 23 bits of useful information. That’s all falling into place; actually, it might even be better to sample at a faster rate and do more digital averaging, which needs to be figured out later.
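The numbers in the paragraph above can be re-derived in a few lines. The only assumption beyond the text is that the averaged noise is white, so its rms value drops with the square root of the number of averaged samples.

```python
import math

SPAN_V = 2.0          # input span from the text
RESOLUTION_V = 1e-6   # target resolution from the text

counts = SPAN_V / RESOLUTION_V                    # about 2 million counts
bits = math.log2(counts)                          # about 20.93, i.e. "21 bits"
dynamic_range_db = 20 * math.log10(counts)        # about 126.0 dB
dynamic_range_21bit_db = 20 * math.log10(2**21)   # about 126.4 dB

# Averaging white noise over N samples reduces rms noise by sqrt(N);
# going from a 10 Hz to a 2.5 Hz output rate means N = 4.
n_avg = round(10.0 / 2.5)
averaging_gain_bits = math.log2(math.sqrt(n_avg))  # 1 bit

print(f"{counts:.0f} counts, {bits:.2f} bits, {dynamic_range_db:.1f} dB "
      f"({dynamic_range_21bit_db:.1f} dB for 21 bits), "
      f"+{averaging_gain_bits:.1f} bit from averaging")
```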

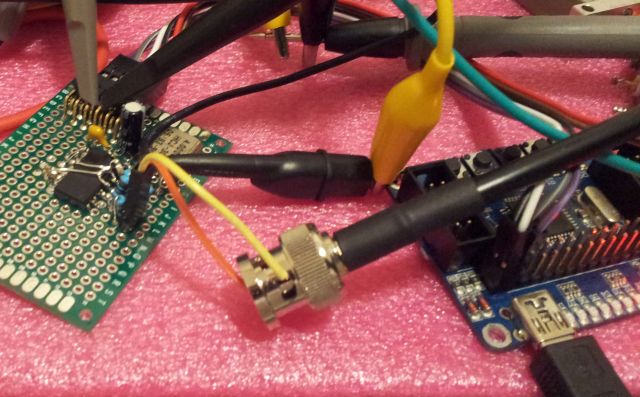

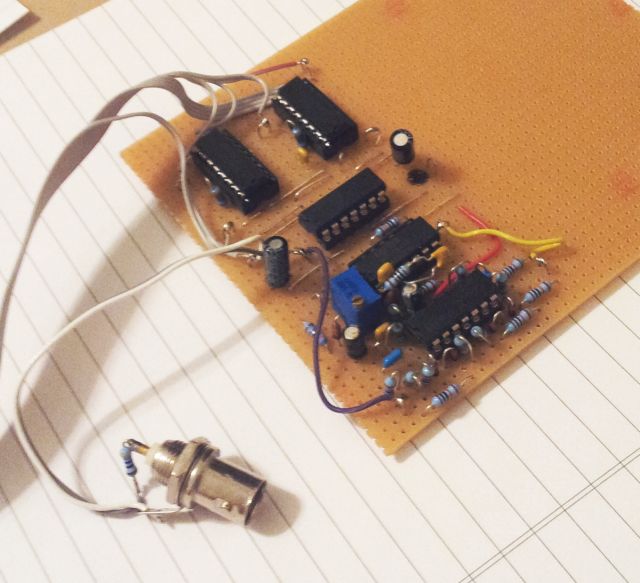

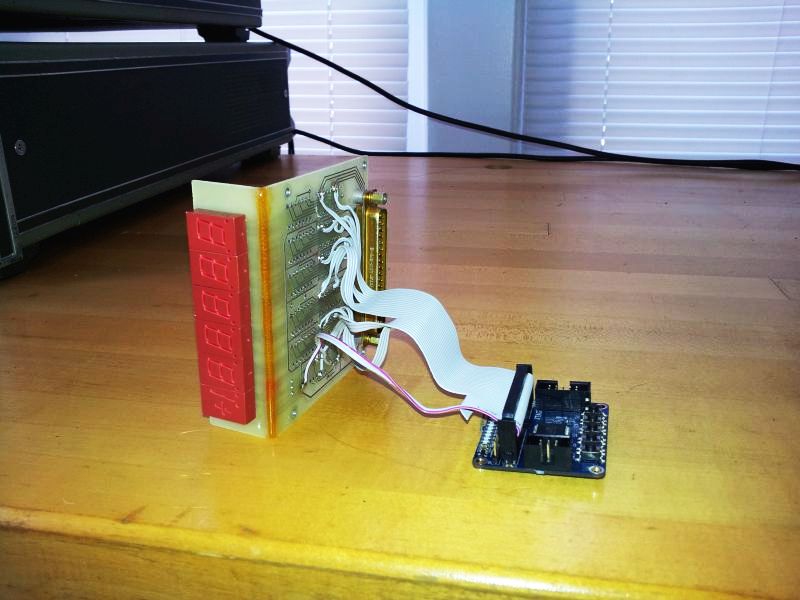

The ADC interfacing, at least the digital end, will be easy, just a few wires. The analog front end is also not too difficult: the AD7710 has a built-in MUX and differential inputs, and the X and Y signals are available as low-impedance, very low-noise signals. I might in fact put the 4 AD7710s in a little metal case, solder it all up free-wired, and encapsulate everything in a thermally conductive epoxy potting compound.

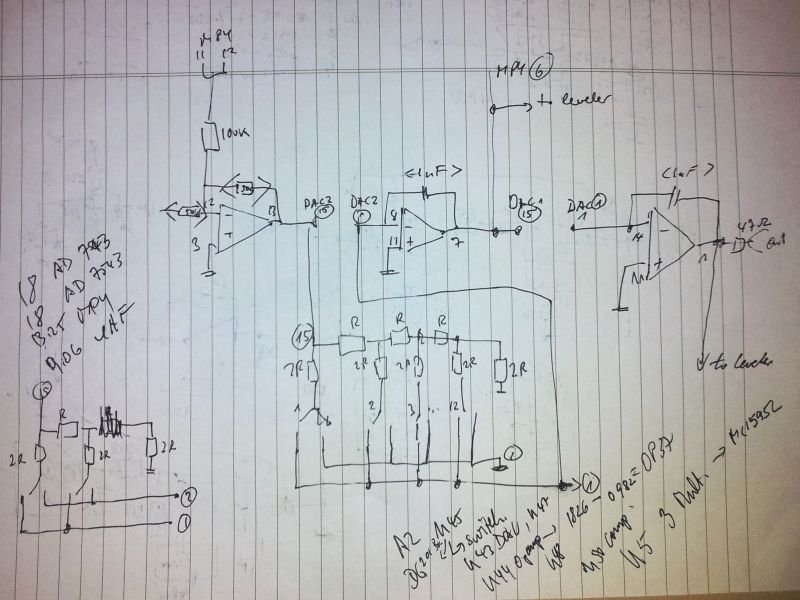

The next step will be to build a low-distortion cal source of variable frequency. The frequency needs to be digitally settable, in steps that don’t have to be very fine, but fine enough to keep it constant, within drift, and to follow possible changes of the sampling rates/“integration times”.
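One common way to choose such a digitally set frequency, sketched here as an assumption rather than the actual plan, is coherent sampling as used in standard ADC test practice: pick f_in = m·f_s/N so that a record of N samples holds exactly m cycles, with m and N coprime so every sample lands on a different phase. The function name and the parameters in the usage line are hypothetical.

```python
import math

def coherent_frequency(f_target, f_sample, n_record):
    """Return (m, f_in) with f_in = m * f_sample / n_record closest to
    f_target, m coprime to n_record and below Nyquist (m < n_record / 2)."""
    m0 = round(f_target * n_record / f_sample)
    for delta in range(n_record):
        for m in (m0 - delta, m0 + delta):
            if 0 < m < n_record / 2 and math.gcd(m, n_record) == 1:
                return m, m * f_sample / n_record
    raise ValueError("no coherent frequency found below Nyquist")

m, f_in = coherent_frequency(1.7, 100.0, 4096)  # m = 69, f_in = 1.6845703125 Hz
```

With this approach the source only has to hold its frequency to a small fraction of f_s/N over a record, and a change of sampling rate simply means recomputing m and f_in.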

Following the above estimate, the distortion of this source should be somewhere around -100 dB. It doesn’t need to be -130 dB, because some deviation of the transfer functions from ideal linearity is acceptable in the given case. If anyone needs better linearity, just add a better signal source and keep the ADC setup thermostated; the limit will then only be the stability of the non-linearity over time.
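For scale, a quick conversion between harmonic levels in dB (relative to the fundamental) and ppm. It is only an order-of-magnitude comparison with the 0.2-0.5 ppm linearity target above and makes no claim about how source distortion maps onto the corrected ADC transfer functions.

```python
import math

def db_to_ppm(level_db):
    """Amplitude level in dB relative to the fundamental -> ppm of the fundamental."""
    return 10 ** (level_db / 20) * 1e6

def ppm_to_db(ppm):
    """Inverse of db_to_ppm."""
    return 20 * math.log10(ppm * 1e-6)

for level in (-100, -130):
    print(f"{level} dB -> {db_to_ppm(level):.3g} ppm")
for ppm in (0.5, 0.2):
    print(f"{ppm} ppm -> {ppm_to_db(ppm):.1f} dB")
```

This gives 10 ppm for -100 dB and about 0.32 ppm for -130 dB, while 0.5 ppm and 0.2 ppm correspond to about -126 dB and -134 dB.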

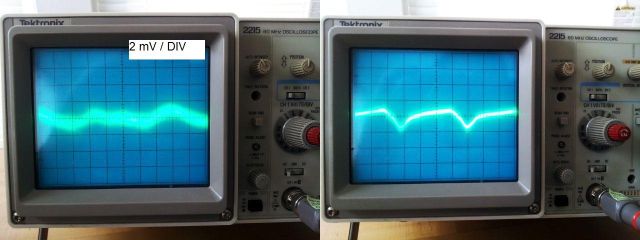

Well, -100 dB might be a bit tough to prove with the equipment I have around here and with relatively plain parts. Let’s see what is possible, and maybe build an improved version later.