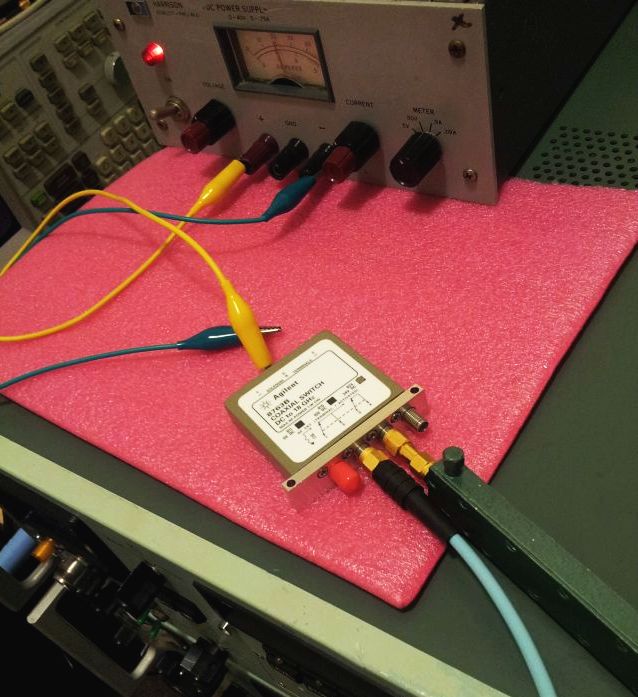

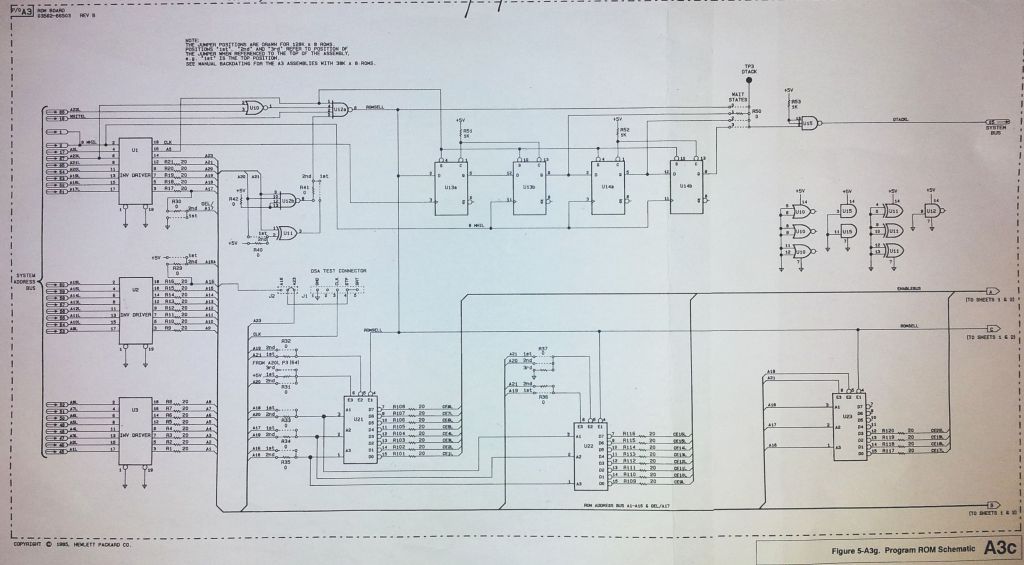

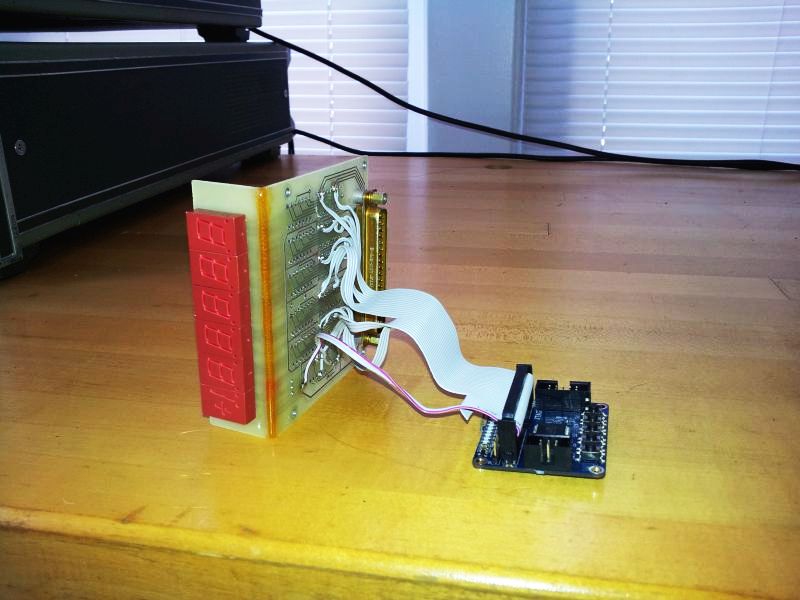

Some items of the mighty precision attenuator calibrator setup are still missing, such as the automatic auto-zero/through calibration and the adaptation of the reflection bridge (see earlier post). Nevertheless, all parts are now in place to do some first real measurements and, thanks to computer control, to generate more data than anyone could ever have recorded manually without getting close to a nervous breakdown.

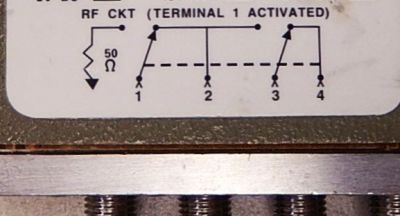

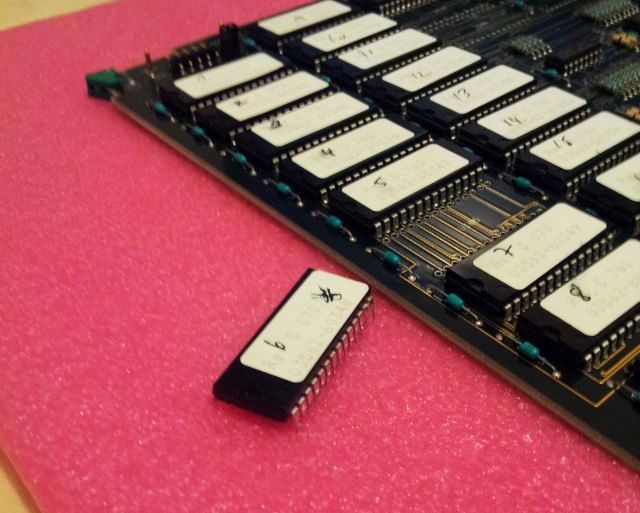

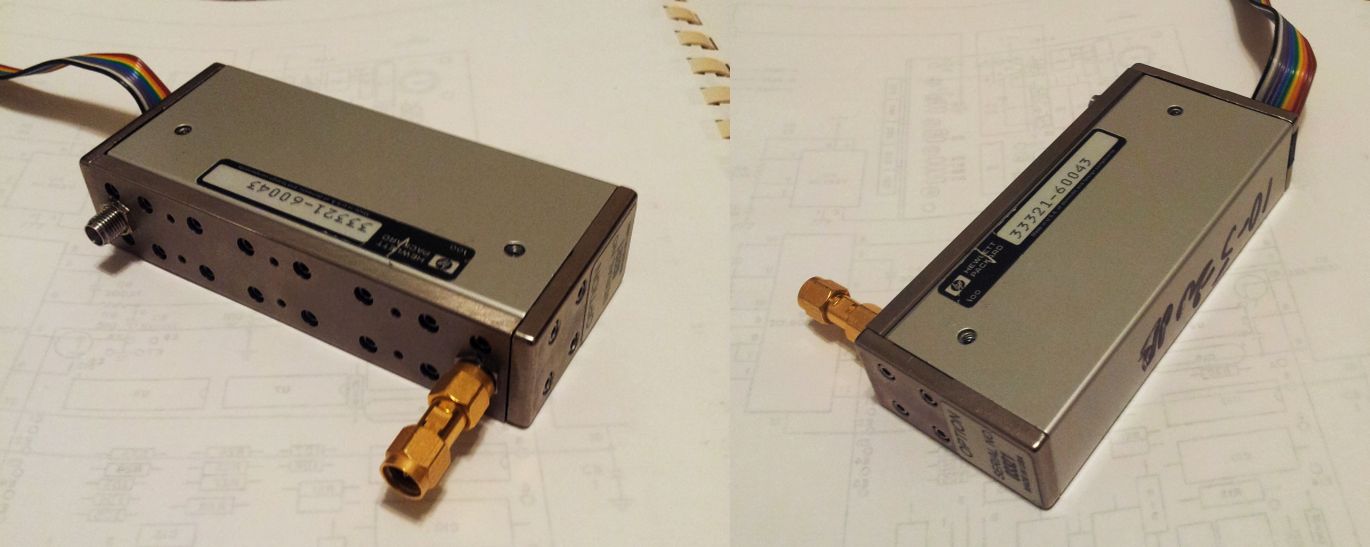

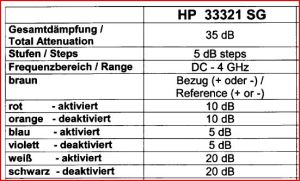

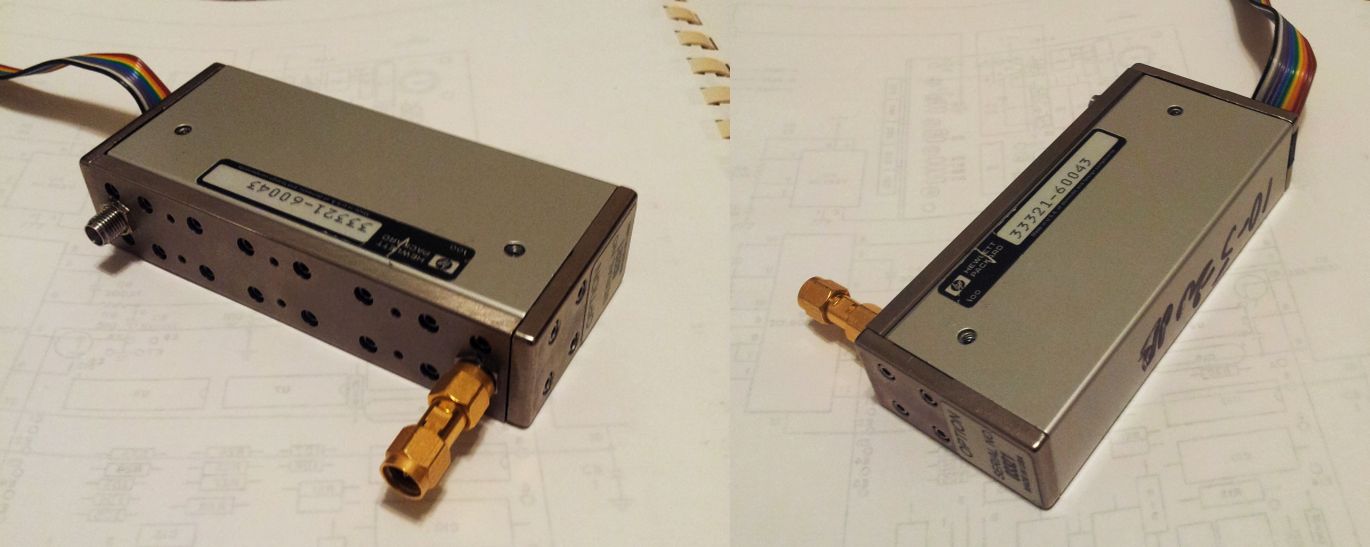

The device under test (DUT): an HPAK 33321 SG step attenuator, 35 dB in 5 dB steps. It is more or less a transmission line with 3 resistor pads that can be individually switched in and out.

Also note the SMA-to-SMA connector needed to get things connected in place of a through line. No allowance was made for this connector; it is an 18 GHz qualified precision part and will have very little loss.

As you can see, it is specified for 4 GHz operation. There are multiple models of these attenuators, from both Weinschel/Aeroflex and HP/Agilent/Keysight, rated up to 26.5 GHz or more. The 4 GHz models are relatively easy to come by, and others have claimed that they are actually quite usable at higher frequencies. Let’s see.

While I don’t have exact specs for the 33321 SG model, there are similar models around, and the numbers HP typically gives are ±0.2 dB at 10 dB, ±0.4 dB at 20 dB, and ±0.5 to 0.7 dB in the 30 to 40 dB range. Repeatability is about ±0.01 dB, which is quite impressive.

To be exact, we are dealing with insertion loss here. It is not quite attenuation, but close; the difference is that no corrections have been made for return losses (due to the SWR of the receiver and of the DUT, which may also change with the attenuation setting).
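To illustrate why insertion loss is not quite attenuation, here is a minimal sketch using the standard two-port mismatch expression: with source and load reflection coefficients of zero, insertion loss reduces to −20·log10|S21|. All SWR values in the usage line are hypothetical, not measured. The DUT is assumed reciprocal (S12 = S21) with its S11/S22 phases fixed at 0, and only the source and load phases are swept, so the result is an indicative error envelope rather than a rigorous worst case.

```python
import cmath
import math


def swr_to_gamma(swr: float) -> float:
    """Magnitude of the reflection coefficient for a given SWR."""
    return (swr - 1.0) / (swr + 1.0)


def attenuation_db(s21: complex) -> float:
    """Matched (reflectionless) attenuation in dB."""
    return -20.0 * math.log10(abs(s21))


def insertion_loss_db(s11, s21, s12, s22, gs, gl) -> float:
    """Insertion loss (dB) of a two-port placed between a source with
    reflection coefficient gs and a load with reflection coefficient gl."""
    num = s21 * (1 - gs * gl)
    den = (1 - s11 * gs) * (1 - s22 * gl) - s12 * s21 * gs * gl
    return -20.0 * math.log10(abs(num / den))


def mismatch_spread(s21, dut_swr, src_swr, load_swr, steps=36):
    """Min/max of (insertion loss - attenuation) in dB while sweeping the
    phases of the source and load reflections (assumed reciprocal DUT,
    DUT S11/S22 phases fixed at 0)."""
    g_dut = swr_to_gamma(dut_swr)
    g_src = swr_to_gamma(src_swr)
    g_load = swr_to_gamma(load_swr)
    errors = []
    for i in range(steps):
        for j in range(steps):
            gs = cmath.rect(g_src, 2 * math.pi * i / steps)
            gl = cmath.rect(g_load, 2 * math.pi * j / steps)
            il = insertion_loss_db(g_dut, s21, s21, g_dut, gs, gl)
            errors.append(il - attenuation_db(s21))
    return min(errors), max(errors)


# Hypothetical example: 20 dB pad (|S21| = 0.1), DUT SWR 1.2, source/load SWR 1.3
lo, hi = mismatch_spread(0.1, dut_swr=1.2, src_swr=1.3, load_swr=1.3)
```

Since the phases are not known in practice, sweeping them is a simple way to see how large the insertion loss vs. attenuation difference could plausibly get for given SWRs.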

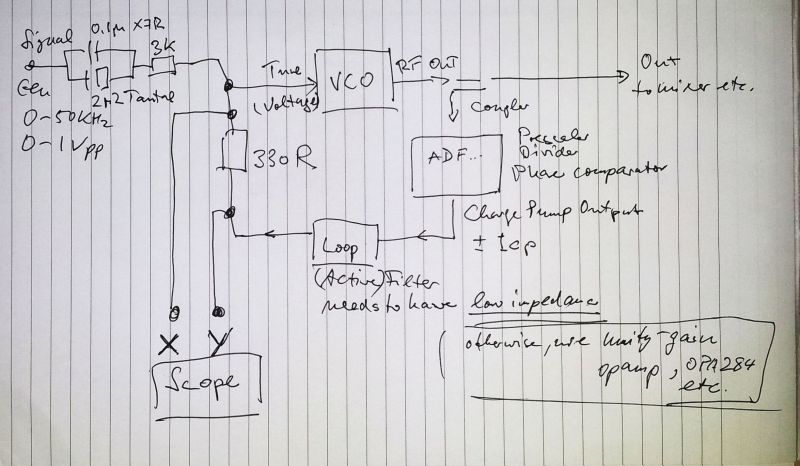

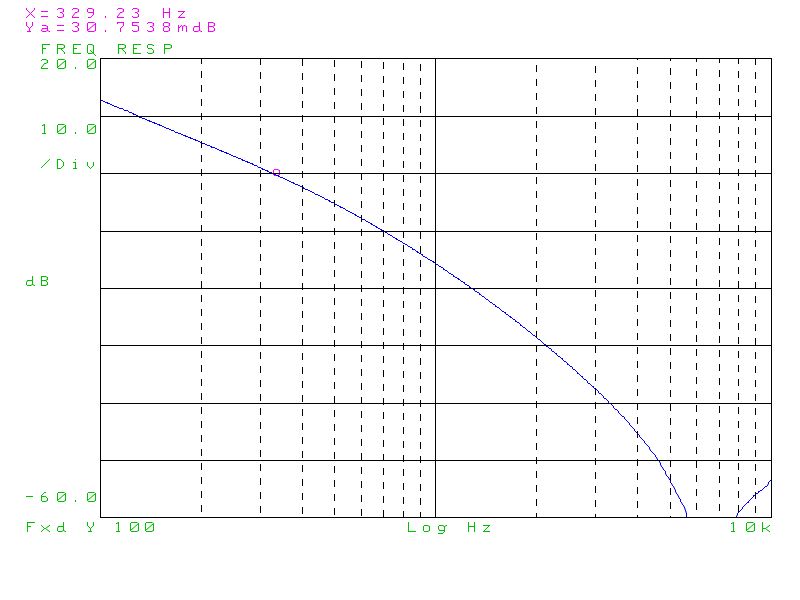

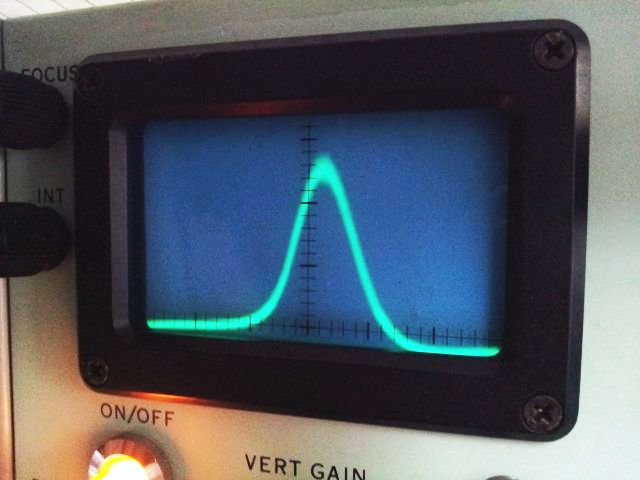

Now, the test:

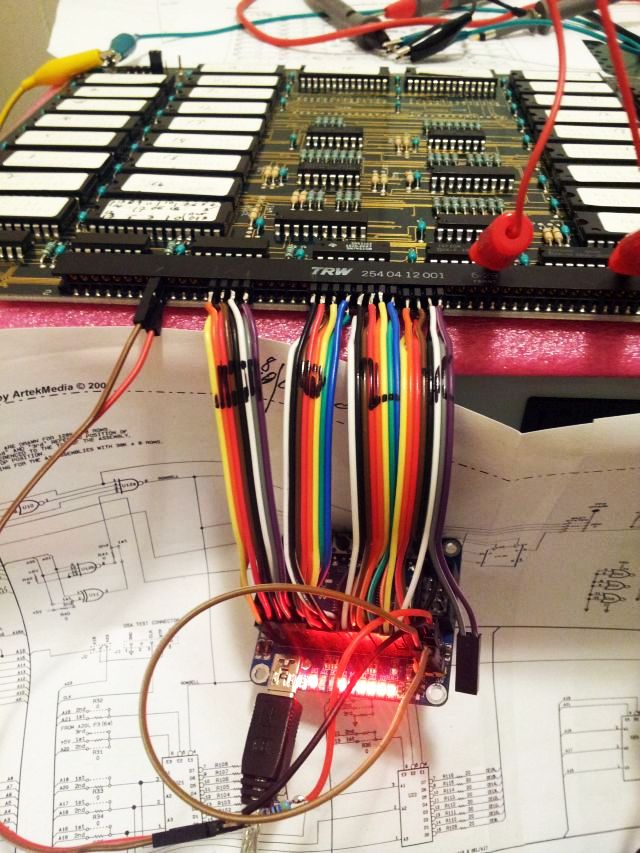

Step (1) – the system was calibrated without the DUT, with just the cables (from the generator and to the receiver) coupled directly (“through” calibration).

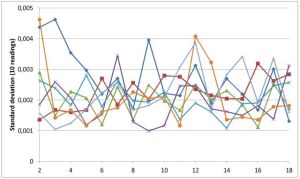

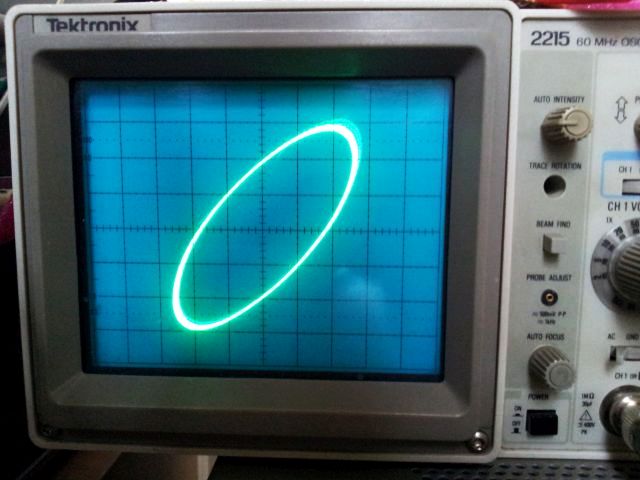

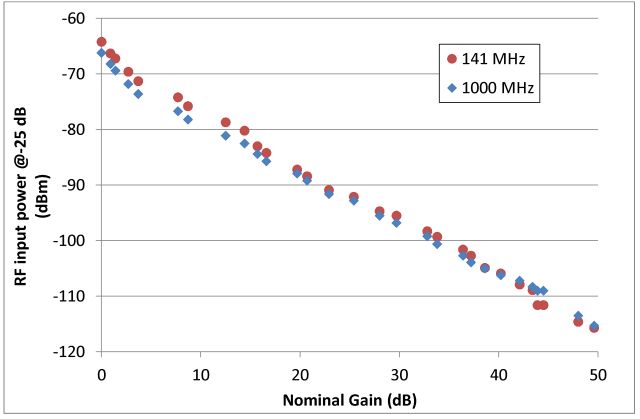

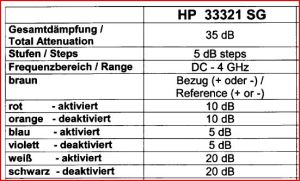

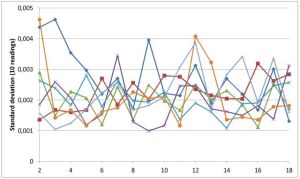

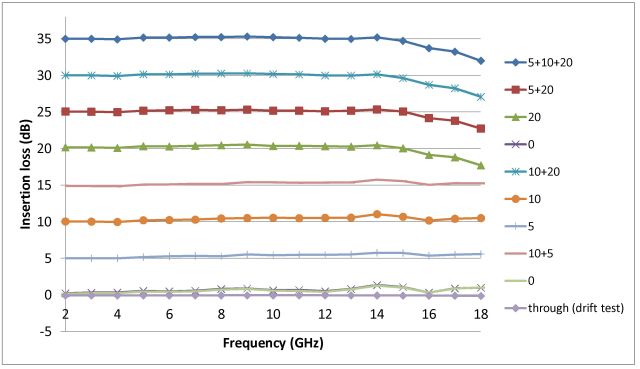

Step (2) – the attenuator was inserted and tested at all steps from 0 to 35 dB, with 0 dB measured twice. For each step, 10 readings were taken, 1 per second, and averaged. The standard deviations are very small, showing the excellent short-term stability of the setup:
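The per-step averaging can be sketched as follows. `read_db` is a hypothetical callable standing in for whatever returns one receiver reading in dB; the defaults of 10 readings at 1 s intervals match the procedure described above.

```python
import statistics
import time
from typing import Callable, List, Tuple


def measure_step(read_db: Callable[[], float], n: int = 10,
                 interval_s: float = 1.0) -> Tuple[float, float, List[float]]:
    """Take n readings (dB) spaced interval_s apart; return the mean,
    the sample standard deviation, and the raw readings."""
    readings: List[float] = []
    for k in range(n):
        readings.append(read_db())
        if k < n - 1:  # no need to wait after the last reading
            time.sleep(interval_s)
    return statistics.mean(readings), statistics.stdev(readings), readings
```

The sample standard deviation describes the scatter of the individual readings; the uncertainty of the averaged value itself is smaller, roughly by a factor of √n for uncorrelated noise.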

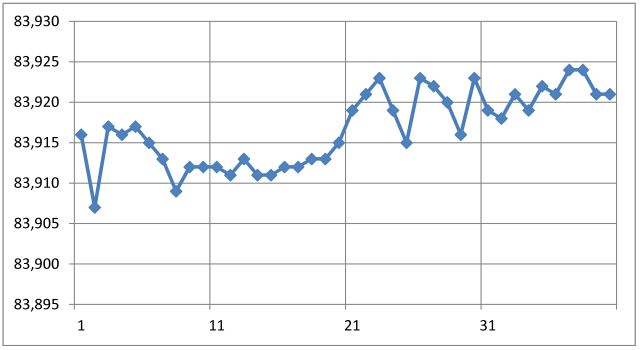

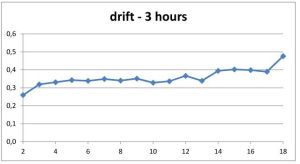

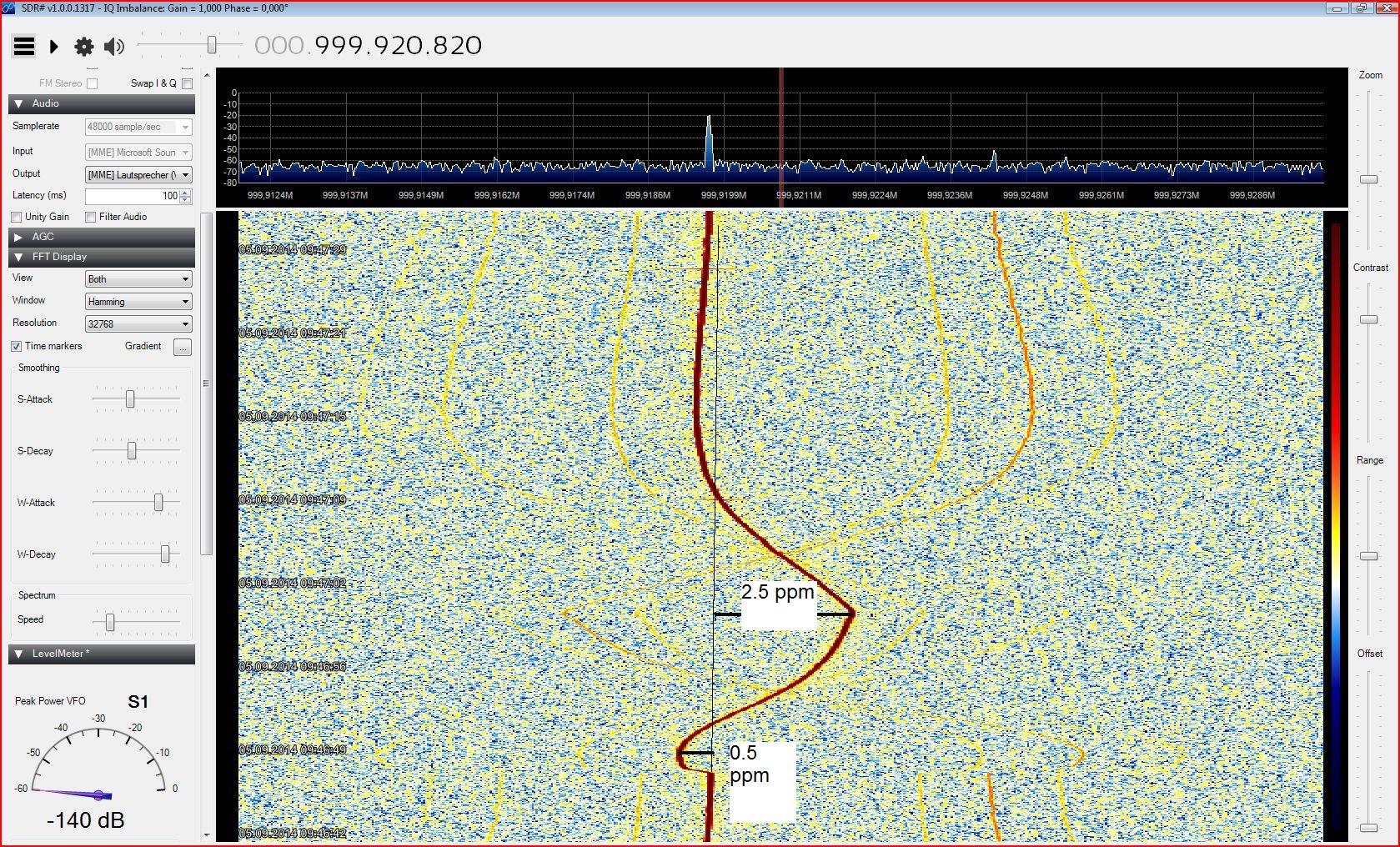

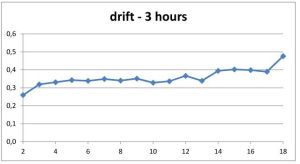

Step (3) – again, a through calibration. The measurements took about 3 hours; the drift was small, distributed linearly in time over the measurement run, and pretty much independent of frequency. Later, drift will be corrected anyway by the yet-to-be-implemented auto-calibration feature.
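A linear drift correction could look roughly like this sketch, which interpolates the through reference between the two through calibrations (steps 1 and 3) at the timestamp of each reading. It assumes, as observed here, that the drift is linear in time and frequency-independent; the function names are hypothetical. Because only plain arithmetic is used, the same functions also work element-wise on NumPy arrays of per-frequency values.

```python
def through_reference(t, t0, thru0_db, t1, thru1_db):
    """Through-calibration reading linearly interpolated to time t
    (t0/t1 are the times of the two through calibrations, same units as t)."""
    frac = (t - t0) / (t1 - t0)
    return thru0_db + frac * (thru1_db - thru0_db)


def drift_corrected_loss(t, reading_db, t0, thru0_db, t1, thru1_db):
    """Insertion loss of a DUT reading taken at time t, referenced to the
    drift-interpolated through reading instead of only the first one."""
    return reading_db - through_reference(t, t0, thru0_db, t1, thru1_db)


# Hypothetical example: through readings 0.0 dB at t = 0 h and 0.3 dB at t = 3 h
loss = drift_corrected_loss(1.5, 20.15, 0.0, 0.0, 3.0, 0.3)
```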

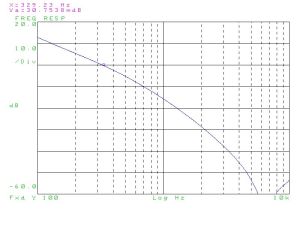

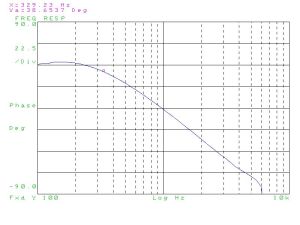

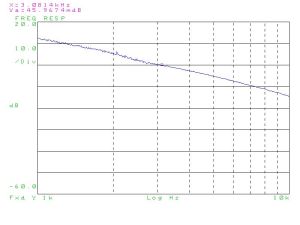

Drift – 3 hours; about 0.1 dB absolute per hour.

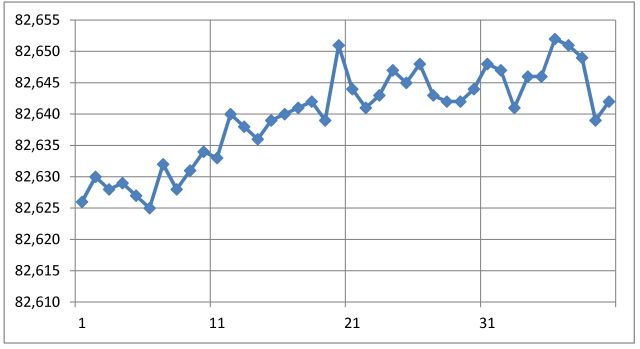

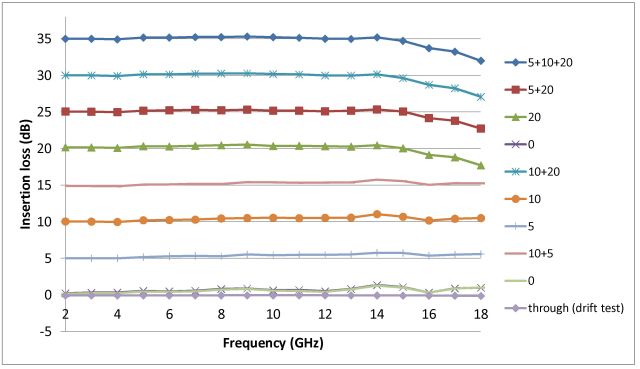

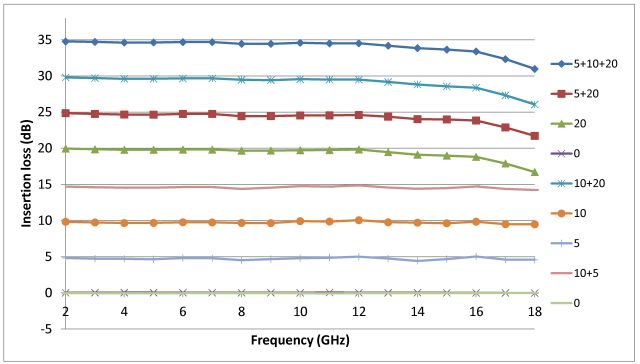

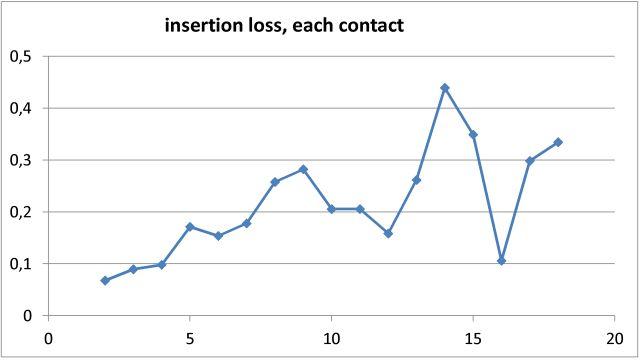

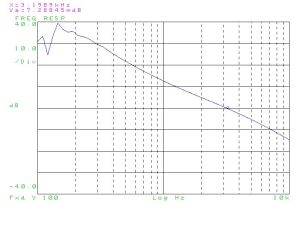

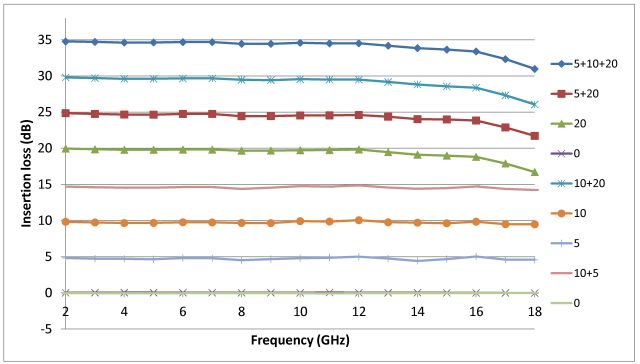

Insertion loss – at all the various steps, relative to “through” connection

Insertion loss – relative to the “0 dB” setting. This is relevant for most practical cases, where the 0 dB values are stored in a calibration ROM and the levels are corrected accordingly. The repeatability of the 0 dB setting was also checked: the standard deviation is about 0.04 dB, but it may be much better short-term (closer to the 0.01 dB claimed in the datasheet). Keep in mind, however, that 0.04 dB at 10 to 18 GHz corresponds to no more than a few mm of cable movement, or slightly more or less torque on a connector.
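Referencing each step to the 0 dB setting, and then comparing it with the nominal step value, is one subtraction per frequency point. A minimal sketch for a single frequency, assuming a dict keyed by the nominal setting in dB (this data layout is hypothetical); since the 0 dB setting was measured twice, the mean of the repeated 0 dB readings can optionally serve as the reference.

```python
from statistics import mean


def relative_to_zero(il_by_setting, zero_readings=None):
    """Insertion loss of every setting relative to the 0 dB setting (dB).
    If zero_readings is given, their mean is used as the 0 dB reference."""
    zero = mean(zero_readings) if zero_readings else il_by_setting[0]
    return {s: il - zero for s, il in il_by_setting.items()}


def deviation_from_nominal(rel_by_setting):
    """Deviation of the 0 dB referenced loss from the nominal step (dB)."""
    return {s: rel - s for s, rel in rel_by_setting.items() if s != 0}
```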

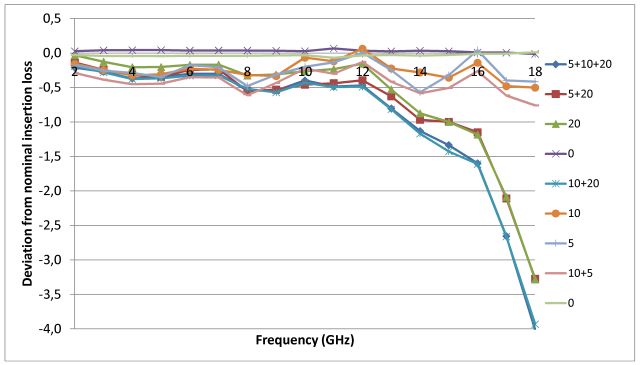

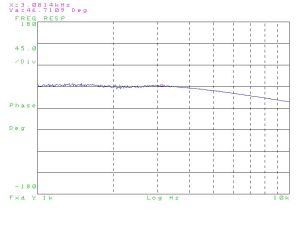

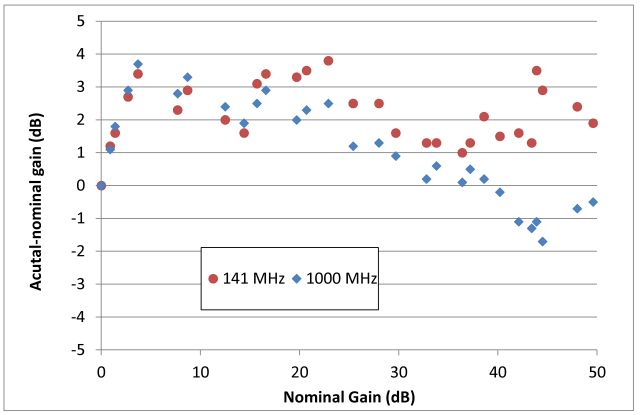

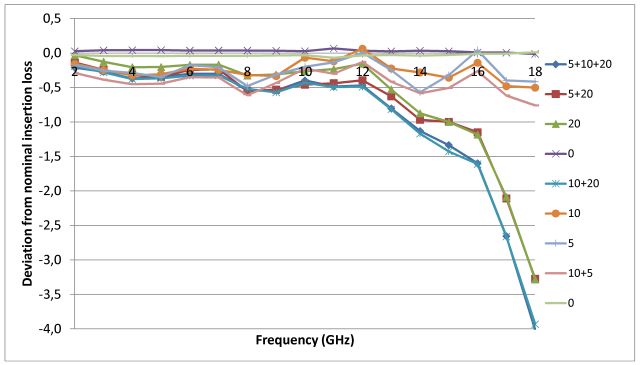

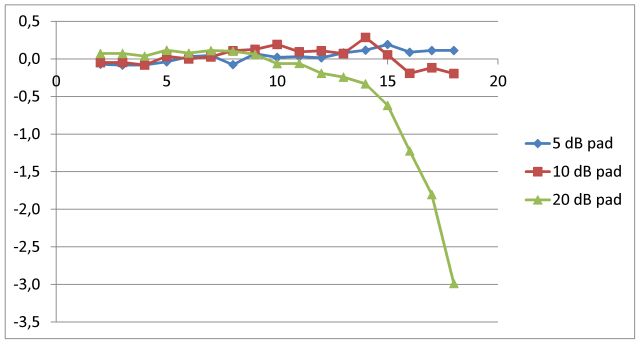

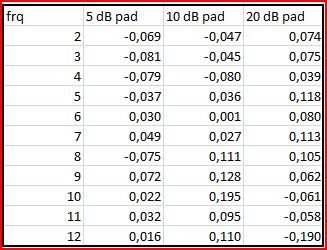

Deviation of 0 dB corrected loss from the nominal values (5-10-15-20-25-30-35 dB steps)

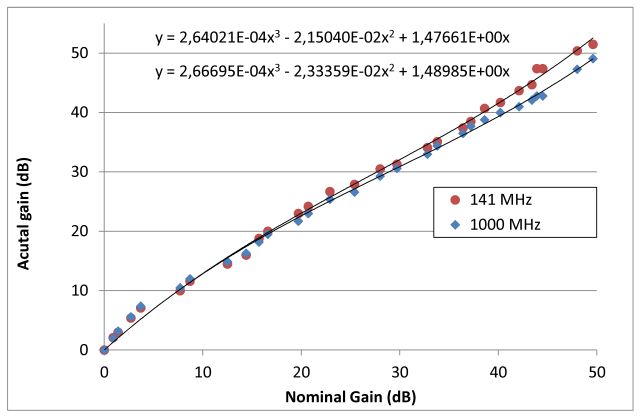

As you can see, the attenuator works great well above 4 GHz, easily up to 12 GHz, and it is still usable up to 18 GHz, with some error. This error seems to come mainly from the 20 dB pad. Rather than relying on the 20 dB measurement alone, some maths were done on the data to determine the insertion loss difference (pad switched in vs. switched out) for each of the pads. For the 20 dB pad, for example, this is done by subtracting these measurements:

(1) 20 dB in, 5 and 10 out; vs 0 dB

(2) 5 and 20 in, vs 5

(3) 10 and 20 in, vs 10

So there are actually 3 combinations for each pad that allow determination of its actual insertion loss. Furthermore, this uses the 1295 receiver at different ranges of the (bolometer) log amplifier and with different IF attenuators inserted, which averages out any slight errors of the log amp and calibration errors of the 1295's IF step attenuators. For even more cancellation, the source power could be varied as well, but this is good enough.
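The pad extraction can be sketched as averaging the in-minus-out differences over several setting pairs. The 20 dB pairs are the three listed above; the pairs for the 5 and 10 dB pads are one analogous choice and are an assumption, not necessarily the ones used in the original analysis. Data are again for a single frequency, keyed by nominal setting in dB.

```python
from statistics import mean

# (setting with pad in, setting with pad out), nominal dB.
# 20 dB pairs from the text; 5 and 10 dB pairs are an assumed analogous choice.
PAIRS = {
    5: [(5, 0), (15, 10), (25, 20)],
    10: [(10, 0), (15, 5), (30, 20)],
    20: [(20, 0), (25, 5), (30, 10)],
}


def pad_in_out_difference(il_by_setting, pairs):
    """Mean insertion loss difference (pad in vs. out) over the given
    setting pairs, plus the individual differences for inspection."""
    diffs = [il_by_setting[on] - il_by_setting[off] for on, off in pairs]
    return mean(diffs), diffs


def all_pads(il_by_setting, pairs_by_pad=PAIRS):
    """Averaged in/out difference for every pad."""
    return {pad: pad_in_out_difference(il_by_setting, pairs)[0]
            for pad, pairs in pairs_by_pad.items()}
```

Looking at the spread of the individual differences, not just their mean, gives a quick check of how additive the pads really are.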

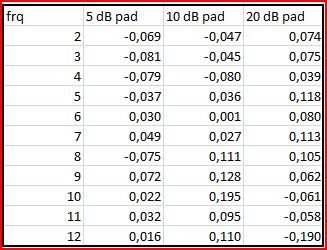

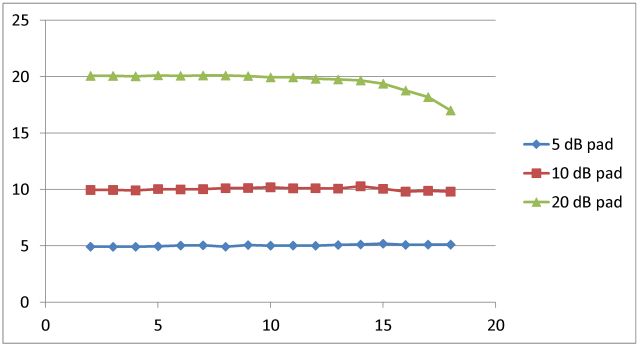

Results of a lot of (computerized) number crunching:

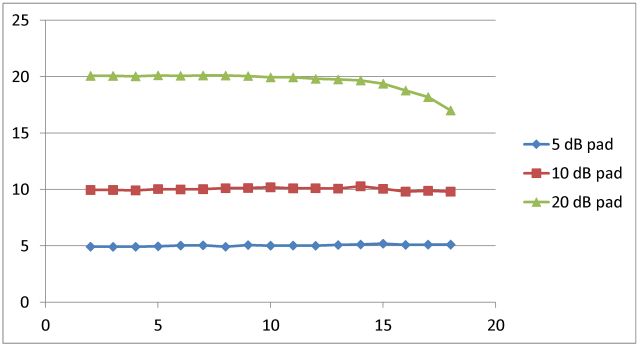

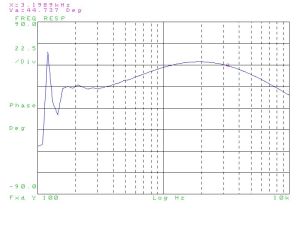

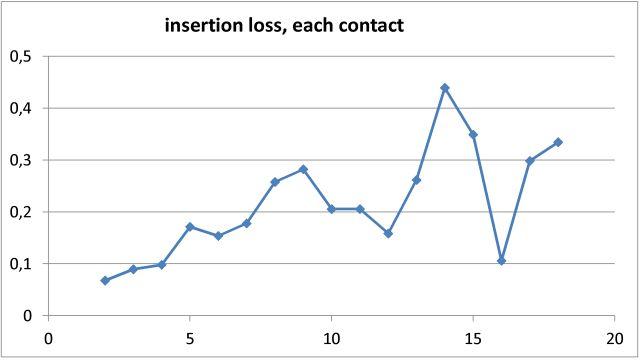

Insertion loss difference in vs. out, for each pad

The 5 and 10 dB pads perform great, the 20 dB pad a bit less so. There must be a way to tune it a bit, but I don’t have a cleanroom here, nor the fixtures needed to scratch a bit of resistive material from the pad at certain places. I wonder how they do this at the factory, and whether there is in fact some manual tuning, at least for the higher-frequency units.

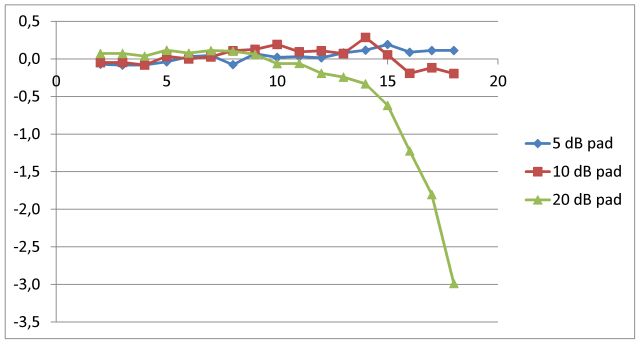

Deviation from nominal, for each pad

This is a truly striking level of accuracy, much better than the specification, and it also shows the precision already achievable with the still-temporary attenuation calibration setup. Up to 12 GHz, there are no issues at all.

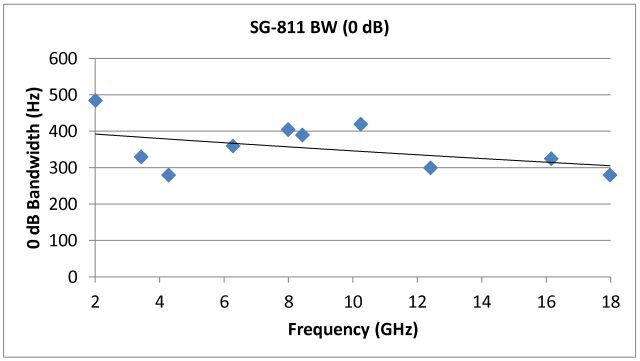

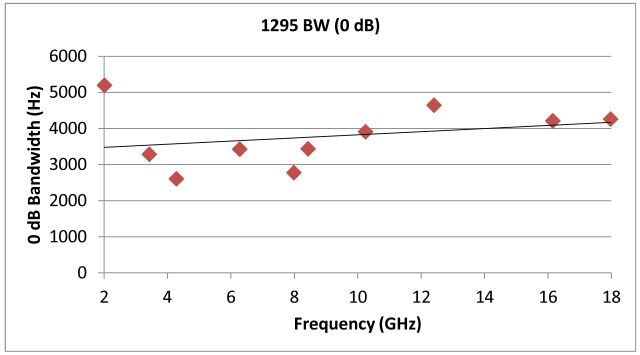

The 0 dB loss: some of it may be in the connectors, some in the transmission lines, and some in the “through” switches of the 3 attenuator sections. Simply assuming that the connectors and transmission lines are lossless, this is the loss per attenuator switch in the “through” (pad switched out) position.
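Because losses in dB add, the per-switch figure under this assumption is simply the 0 dB insertion loss divided by the number of switches. A minimal sketch; the `fixed_loss_db` parameter is a hypothetical hook for subtracting a known connector/line loss first, and it defaults to 0, matching the simplifying assumption above.

```python
def loss_per_switch_db(zero_setting_il_db, n_switches=3, fixed_loss_db=0.0):
    """Loss per 'through' switch (dB), assuming the 0 dB insertion loss,
    minus any known fixed connector/line loss, is split equally among
    n_switches identical switches."""
    return (zero_setting_il_db - fixed_loss_db) / n_switches
```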

All in all, the best way to use these attenuators is evidently to measure the 0 dB insertion loss very accurately, on a fairly narrowly spaced frequency grid. The pads are best characterized by recording values at various attenuation settings; polynomial fits give a very good approximation without needing a dense frequency grid, and the pad losses appear to be simply additive, with little cross-correlation error.
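That recipe can be sketched as follows: the 0 dB insertion loss is linearly interpolated from a dense frequency grid, each pad's in/out difference is represented by a low-order polynomial in frequency, and the total loss for a setting is their sum (the additive model). The polynomial degree and the function names are assumptions for illustration; `numpy.polynomial.Polynomial.fit` is used because it rescales the frequency axis internally, which keeps the fit well conditioned.

```python
import numpy as np
from numpy.polynomial import Polynomial


def fit_pad_loss(freq_ghz, loss_db, degree=3):
    """Least-squares polynomial fit of one pad's in/out difference vs. frequency."""
    return Polynomial.fit(np.asarray(freq_ghz, dtype=float),
                          np.asarray(loss_db, dtype=float), degree)


def max_fit_residual(fit, freq_ghz, loss_db):
    """Largest absolute deviation (dB) of the fit from the measured points."""
    f = np.asarray(freq_ghz, dtype=float)
    return float(np.max(np.abs(fit(f) - np.asarray(loss_db, dtype=float))))


def predicted_loss(freq_ghz, zero_freqs_ghz, zero_il_db, pad_fits, pads_in):
    """Additive model at a single frequency: interpolated 0 dB loss plus
    the fitted in/out difference of every pad switched in."""
    base = np.interp(freq_ghz, zero_freqs_ghz, zero_il_db)
    return float(base + sum(pad_fits[p](freq_ghz) for p in pads_in))
```

Checking `max_fit_residual` against the repeatability of the measurement is a simple way to choose the lowest polynomial degree that is still good enough.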

Sure, all of this could be analyzed with much more maths involved, but I doubt it would change the application-relevant aspects much; it would be more of a numerical exercise.