Designing a stable PLL is not much of a challenge, given all the simulation tools available, once you have mastered some basic experiments with the 4046 chip or similar circuits. For PLL simulation software, I suggest looking at ADIsimPLL, available free of charge from Analog Devices.

However, stable doesn’t necessarily mean wideband, with similar characteristics over the full 2 to 18 GHz band. That’s what we want to achieve here.

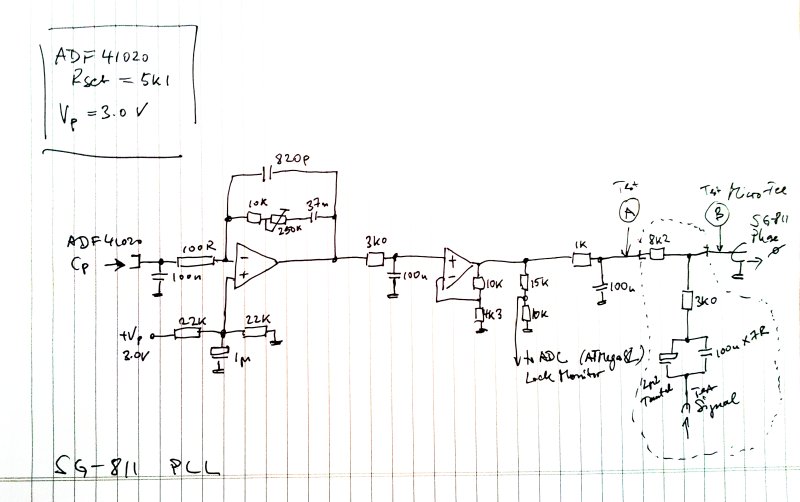

First, some targets. After reviewing the circuits of the Micro-Tel SG-811/1295, and looking at the stability of the built-in YIGs, I figured that a good PLL bandwidth for this system would be somewhere in the 200-500 Hz region. This would still allow correcting for some mains-induced frequency fluctuations (50/60 Hz), and these frequencies are well below the 25 kHz phase detector frequency used for the ADF41020. Furthermore, the bandwidth should be reasonably stable over the full range of frequencies, with no need for multiple loop filters, or troublesome switchable capacitors/variable gain amplifiers. Everything should operate from a single-ended 15 V power supply, to provide 0-10 V for the Micro-Tel 1295, and 0-3 V for the SG-811, from a single little board.

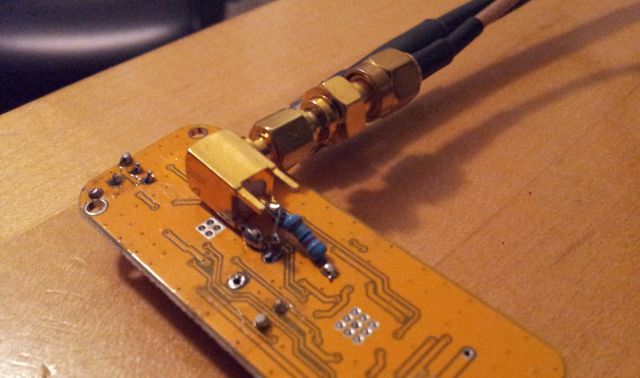

With this in mind, an OPA284 rail-to-rail precision amplifier (low noise, 4 MHz BW, can drive ±6.5 mA) was selected as the active part, and some capacitors and resistors were put together (only use good quality capacitors: polymer dielectric, or stable NP0 ceramic). There is only one adjustment, the damping resistor in the feedback loop.

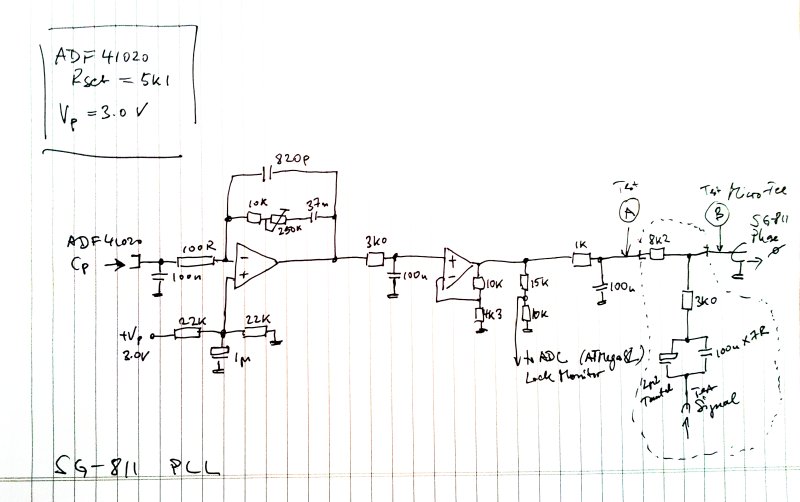

Sketch of the schematic

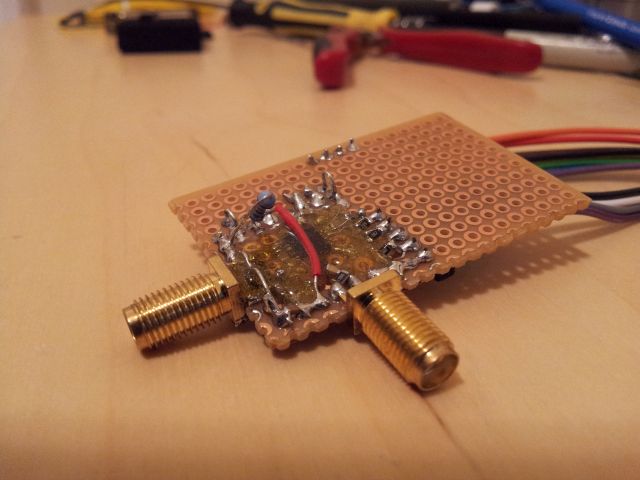

How to figure out the loop characteristics? Many pages have been written about this, determining open-loop gains and phase margins, etc., but how to approach this in practice, once you have done the calculations and figured out a setup that basically works? This is where the extra resistor and the two test points (A, B, see schematic) come into play. The resistor close to the output (8k2; this is just a temporary part, only inserted during test, and bridged with a piece of wire during normal operation) is used to isolate the loop output from the SG-811 phase lock input (which is nothing other than a heavy VCO, a voltage controlled oscillator). A few extra parts are also connected to feed a test signal to the VCO, in addition to the loop filter output voltage.

This test port is intended to disturb the PLL just a bit, without causing loss of phase lock, and to measure the response. Such work is best done with a dynamic signal analyzer. I’m using an HPAK 3562A, not because it is the latest model, but because that’s what I have around here in my temporary workshop. It had the old CRT replaced by a nice color LCD screen, and it features a very acceptable noise floor, and gain/phase analysis.

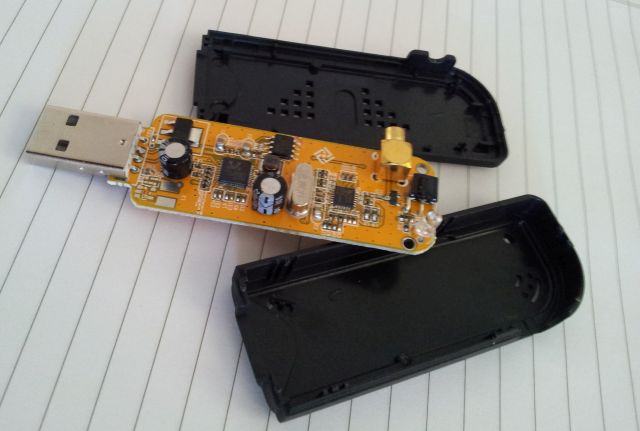

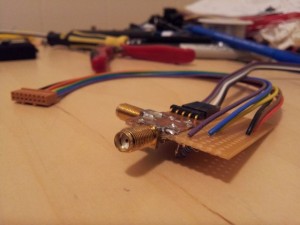

The test setup (please excuse the mess, not too much empty bench space around here)

Now we just need to work through various frequencies and settings, to better understand the characteristics of the system.

To cover all the YIGs and bands of the SG-811 (which might have unknown variations in tuning sensitivity, noise, etc.), frequencies around 2, 6, 10, 12.5 and 17.5 GHz were chosen for the test (exact values can be found in the worksheet, better not to use even values, e.g., 2.0000 GHz, but to exercise the divider circuits – to see if there are any spurs).
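As a quick sanity check on candidate test frequencies, the overall division ratio between the RF output and the 25 kHz phase detector frequency can be computed. This is only a minimal sketch: the function name is made up for illustration, and how the ratio is split between the prescaler and the counters of the ADF41020 is not modelled here (see the datasheet for that). The 2.2221 GHz value is the test frequency used later in this post.

```python
from fractions import Fraction

PFD_HZ = 25_000  # phase detector frequency quoted in the text


def total_division_ratio(f_rf_hz: int, f_pfd_hz: int = PFD_HZ) -> int:
    """Overall RF-to-PFD division ratio (hypothetical helper).

    Raises ValueError if the RF frequency is not an integer multiple
    of the phase detector frequency, i.e. cannot be hit exactly by an
    integer-N loop.
    """
    ratio = Fraction(f_rf_hz, f_pfd_hz)
    if ratio.denominator != 1:
        raise ValueError(f"{f_rf_hz} Hz is not a multiple of {f_pfd_hz} Hz")
    return ratio.numerator


if __name__ == "__main__":
    print(total_division_ratio(2_000_000_000))  # 80000, a very "even" ratio
    print(total_division_ratio(2_222_100_000))  # 88884, exercises more divider bits
```

An "odd" frequency such as 2.2221 GHz gives a less regular ratio than 2.0000 GHz, which is the point of avoiding even values.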

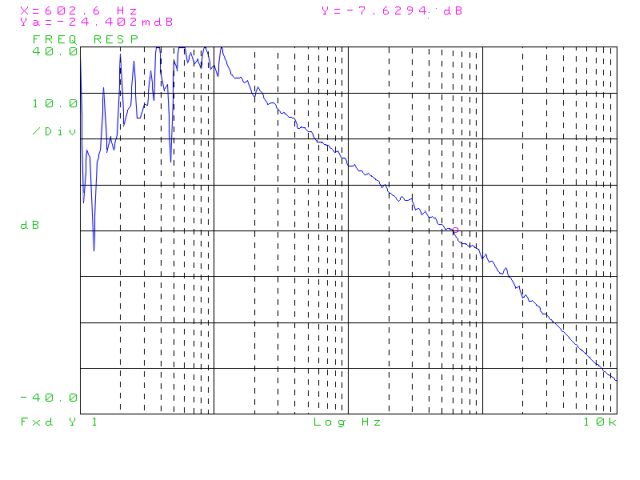

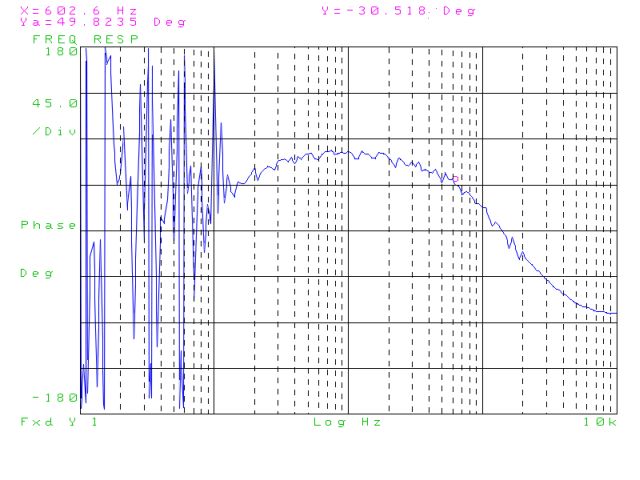

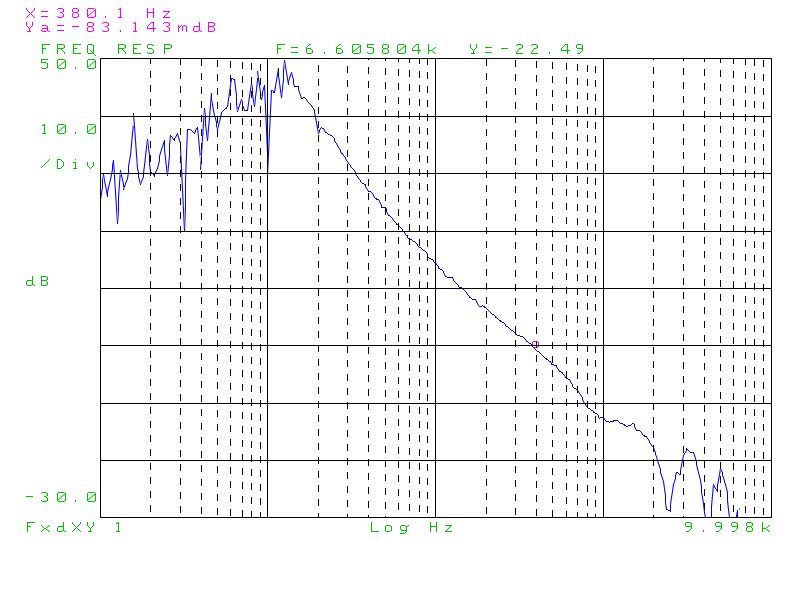

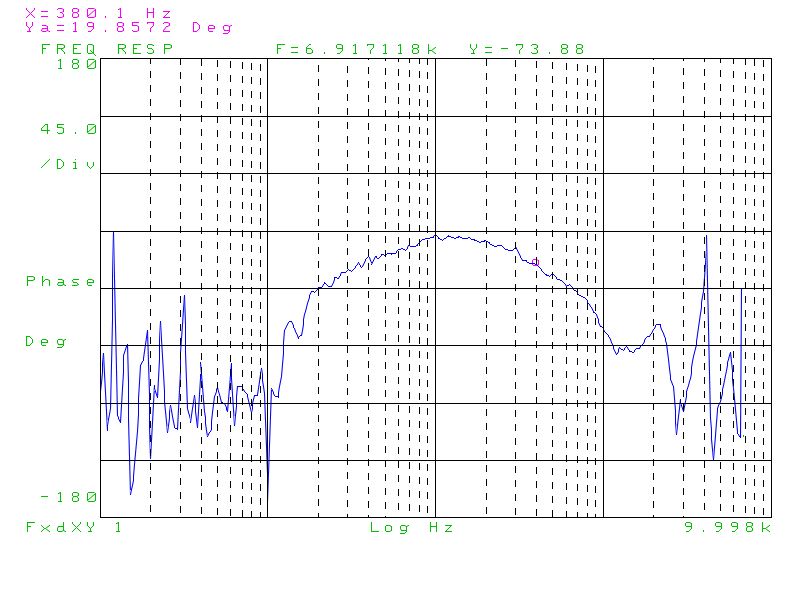

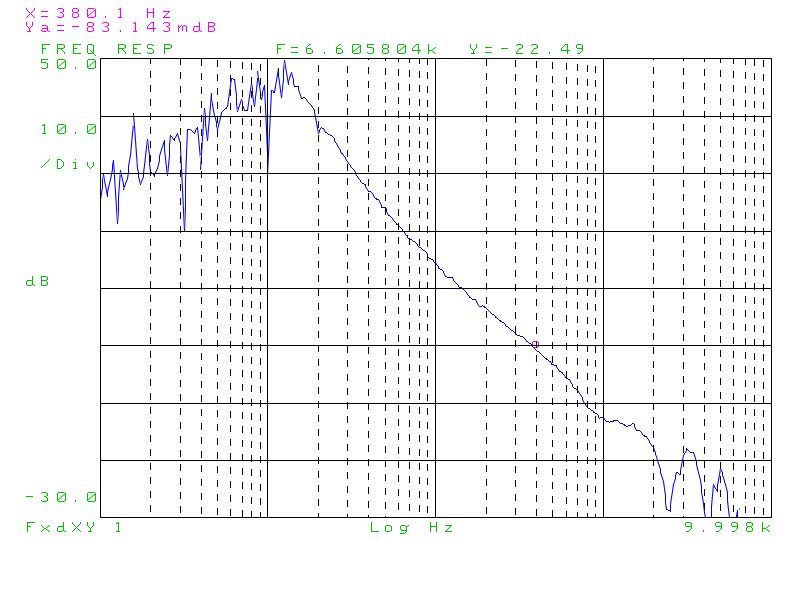

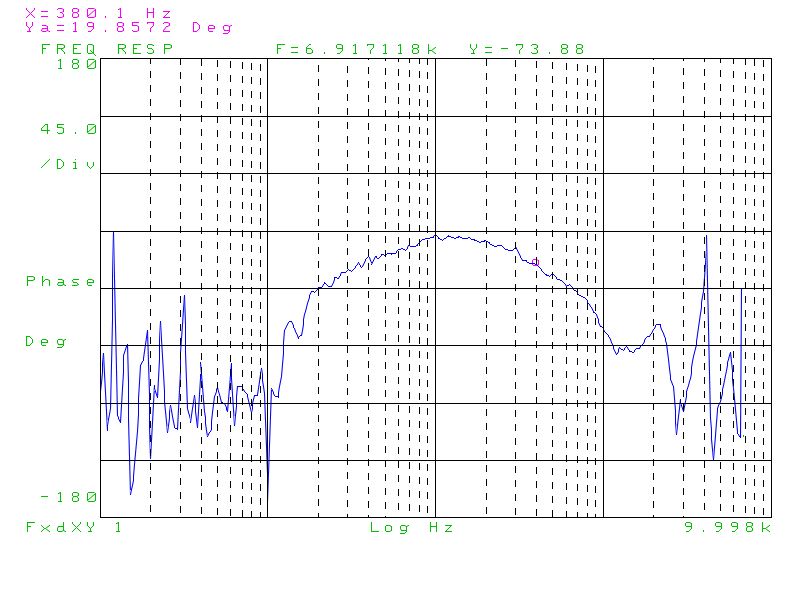

At each frequency, the magnitude and phase responses were collected. Some examples:

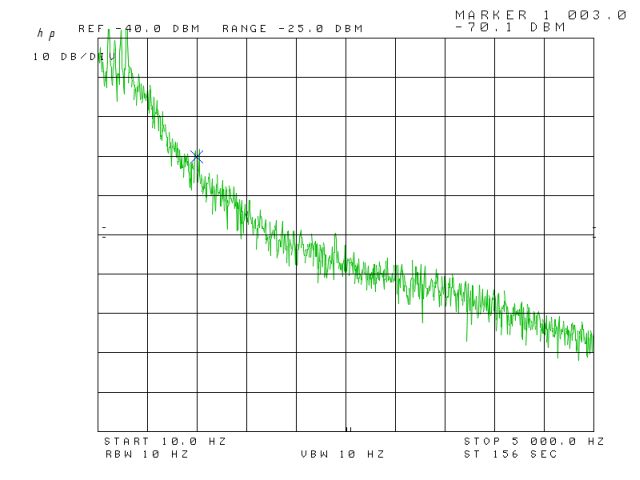

Gain (disregard the unstable response below 10 Hz, just an artifact)

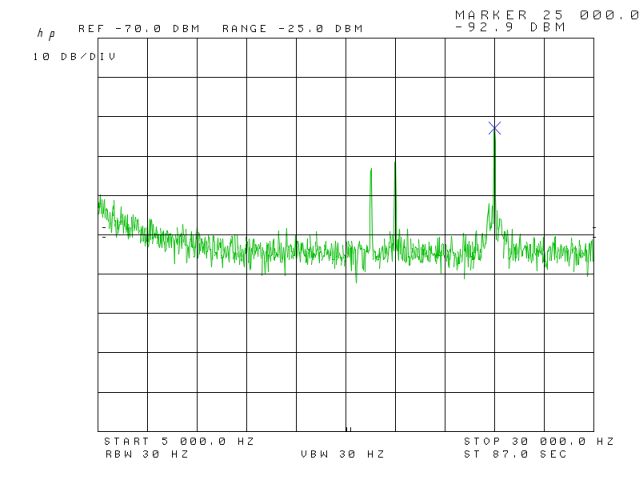

Phase

The interesting point is the 0 dB crossing of the gain trace, the unity gain bandwidth. This is determined for each test condition, and then the corresponding phase is read from the phase plot. In this example, BW_0dB is about 380 Hz, with about 20 degrees phase. Why is it so important? Simply because we need to keep this phase gap (between the A and B signals) well above 0 degrees. Otherwise, the loop will become unstable and oscillate, and massive phase noise of the generator will result.

Some call this the phase margin, and so do I, although the whole discussion about gain and phase margins is typically centered around open-loop systems, whereas we are dealing with a closed loop here. Fair enough.
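Reading the 0 dB crossing and the matching phase off the analyzer traces can also be done numerically, once the gain and phase data are exported. The following is a rough sketch, not the procedure used for the plots in this post: the function name is hypothetical, the interpolation (linear in dB and degrees over log frequency) is my own choice, and the sample data are synthetic, not measurements.

```python
import math


def unity_gain_crossing(freq_hz, gain_db, phase_deg, f_min_hz=10.0):
    """Return (bandwidth_hz, phase_deg) at the first downward 0 dB crossing.

    Points below f_min_hz are skipped, because the response below
    about 10 Hz is only an artifact. Interpolation is linear in
    gain (dB) and phase (degrees) versus log10(frequency).
    """
    for i in range(len(freq_hz) - 1):
        if freq_hz[i] < f_min_hz:
            continue
        g0, g1 = gain_db[i], gain_db[i + 1]
        if g0 >= 0.0 > g1:
            t = g0 / (g0 - g1)  # fraction of the way to the next point
            lf0, lf1 = math.log10(freq_hz[i]), math.log10(freq_hz[i + 1])
            bw = 10 ** (lf0 + t * (lf1 - lf0))
            ph = phase_deg[i] + t * (phase_deg[i + 1] - phase_deg[i])
            return bw, ph
    raise ValueError("no 0 dB crossing found in the data")


if __name__ == "__main__":
    # Synthetic example data, for illustration only.
    f = [100.0, 200.0, 400.0, 800.0]
    g = [10.0, 4.0, -2.0, -8.0]
    p = [60.0, 40.0, 20.0, 0.0]
    bw, ph = unity_gain_crossing(f, g, p)
    print(f"BW_0dB = {bw:.0f} Hz, phase = {ph:.1f} deg")
```

Doing this for every test condition gives one (bandwidth, phase) pair per frequency and setting, which is all that is needed for the summary plots.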

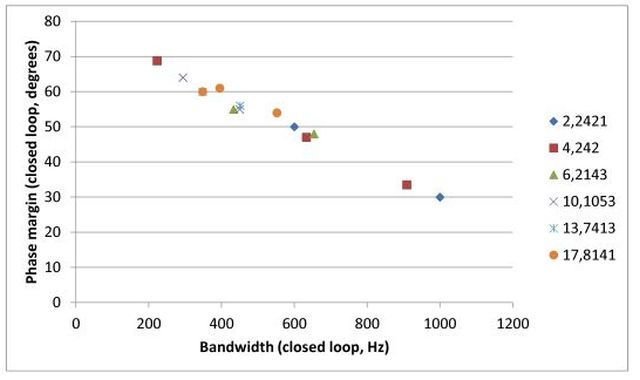

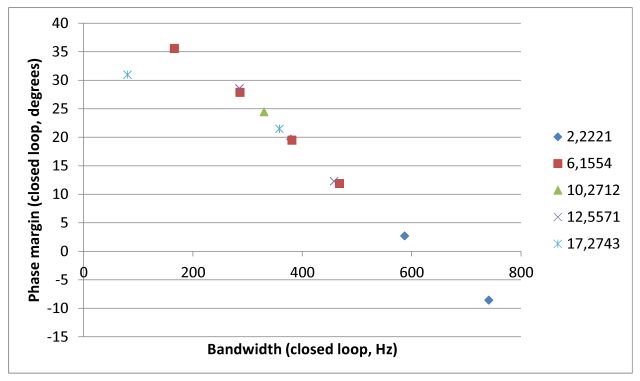

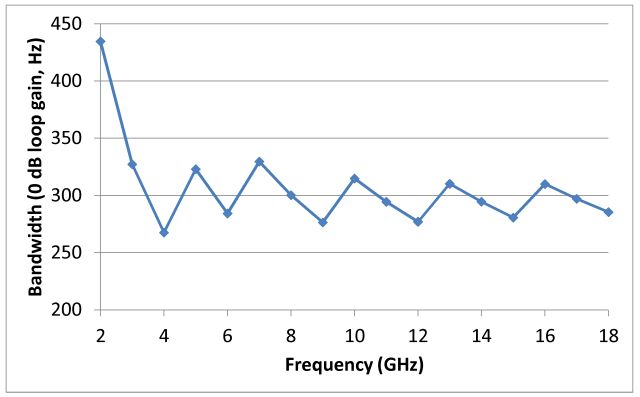

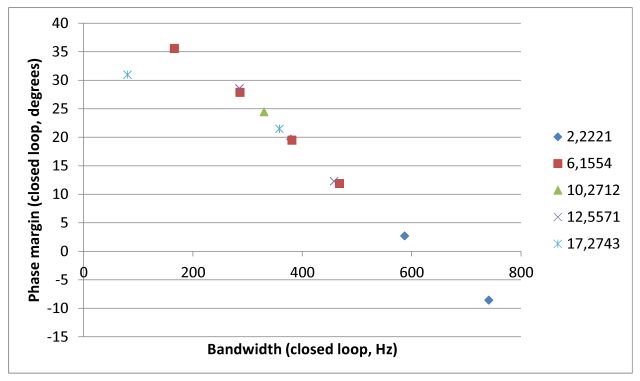

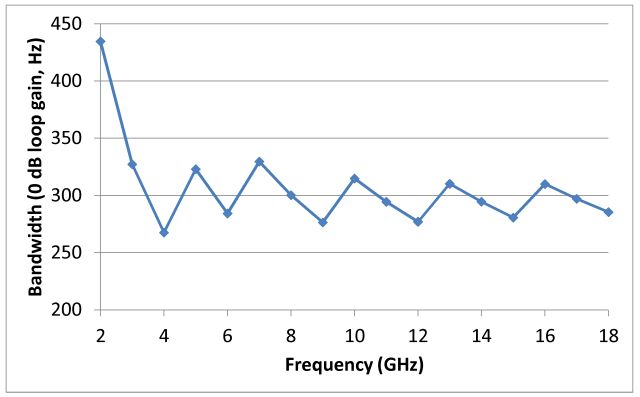

Now, after some measurements, and number crunching, the results:

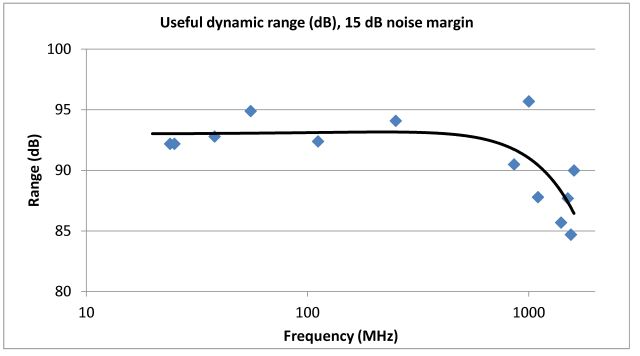

Phase vs. BW, at various frequencies

As you can see, the phase margin is virtually independent of frequency, and purely a function of bandwidth. So we can limit all further discussion to bandwidth, and don’t need to worry about phase margin separately. It is also clear from this diagram that, for the given filter, we had better stay in the 250-300 Hz bandwidth region, to keep the phase margin above 25 degrees, which is a reasonable value.

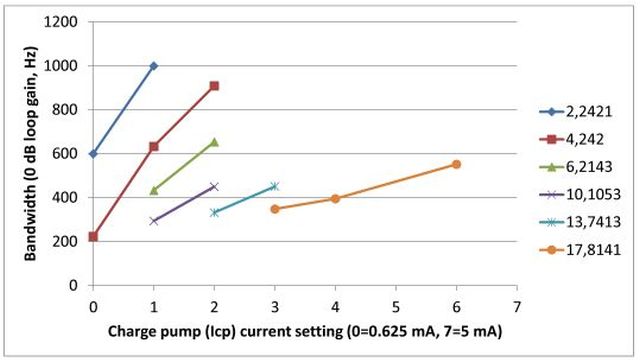

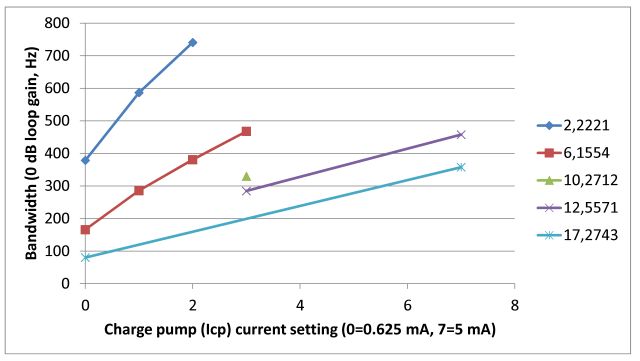

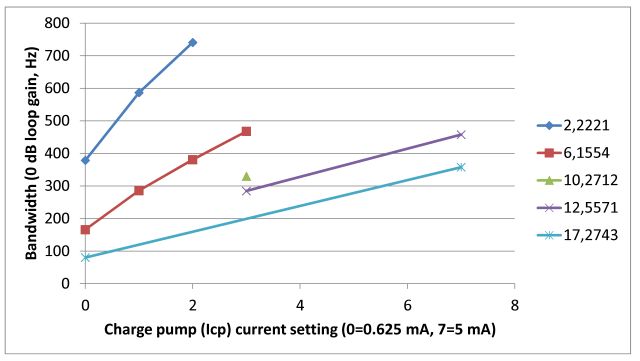

Now, how to keep the bandwidth stable with all the frequencies and YIGs/SG-811 bands and sensitivities changing? Fortunately, the ADF41020 has a nice built-in function: the charge pump current can be set in 8 steps (0 to 7), from 0.625 to 5 mA (for a 5k1 reference resistor), and setting the charge pump current (Icp) is not much different from changing the gain of the loop filter. The gain, in turn, will change the 0 dB bandwidth in a fairly linear fashion. Note: typically, the adjustable charge pump current is used to improve locking speed, at wider bandwidth, and mainly for fixed-frequency applications. But it is also a very useful feature to keep the bandwidth stable for PLL circuits that need to cover a wide range of frequencies, as in the case of the SG-811.
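To get a feeling for the available adjustment range, the eight settings can be tabulated together with the loop gain change relative to setting 0. This sketch assumes the steps are equally spaced between 0.625 mA and 5 mA (consistent with the end points given above, but check the datasheet), and the function names are only for illustration.

```python
import math

ICP_MAX_MA = 5.0  # full-scale current with the 5k1 reference resistor
N_STEPS = 8       # settings 0 to 7


def icp_ma(setting: int) -> float:
    """Charge pump current in mA, assuming equally spaced steps."""
    if not 0 <= setting < N_STEPS:
        raise ValueError("setting must be in the range 0..7")
    return ICP_MAX_MA * (setting + 1) / N_STEPS


def relative_gain_db(setting: int) -> float:
    """Loop gain change relative to setting 0, in dB."""
    return 20.0 * math.log10(icp_ma(setting) / icp_ma(0))


if __name__ == "__main__":
    for s in range(N_STEPS):
        print(f"Icp={s}: {icp_ma(s):.3f} mA, {relative_gain_db(s):+.1f} dB")
```

Under this assumption, the full range from setting 0 to 7 is a factor of 8 in current, or about 18 dB of loop gain.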

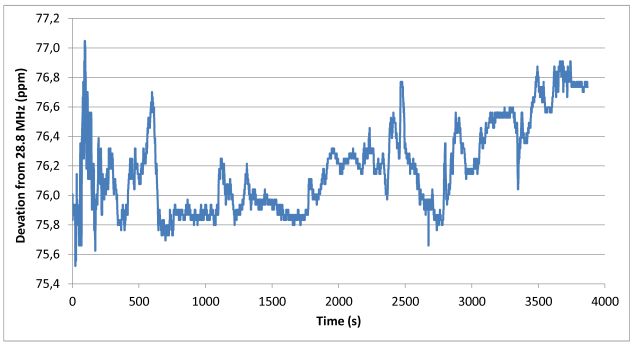

The next result – bandwidth vs. Icp setpoint

Looking at this diagram, the bandwidth is not only a function of Icp, but also a function of frequency. At the higher frequencies, the bandwidth is much lower. After some calculations, it turns out that the product of bandwidth and frequency to the power of 0.7 (a bit more than the square root) is a good parameter, which gives an almost linear relationship vs. Icp (see worksheet, if interested).
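The relationship above can be turned into a small setpoint calculation: if BW × f^0.7 is roughly linear in Icp, the setting closest to a target bandwidth can be picked for each frequency. The sketch below assumes a model of the form BW × f^0.7 = k × Icp + c, with f in GHz and Icp in mA. The coefficients k and c are placeholders that would have to come from a fit to the worksheet data, and the values in the usage example are purely illustrative, not measured. Equally spaced Icp steps are assumed as well.

```python
def icp_ma(setting: int) -> float:
    """Charge pump current in mA for settings 0..7 (equal steps assumed)."""
    return 5.0 * (setting + 1) / 8


def predicted_bw_hz(f_ghz: float, setting: int, k: float, c: float) -> float:
    """Bandwidth from the assumed model BW * f**0.7 = k * Icp + c."""
    return (k * icp_ma(setting) + c) / f_ghz ** 0.7


def best_setting(f_ghz: float, target_bw_hz: float, k: float, c: float) -> int:
    """Icp setting (0..7) whose predicted bandwidth is closest to the target."""
    return min(
        range(8),
        key=lambda s: abs(predicted_bw_hz(f_ghz, s, k, c) - target_bw_hz),
    )


if __name__ == "__main__":
    K, C = 100.0, 0.0  # illustrative placeholder coefficients only
    for f in (2.0, 6.0, 10.0, 17.5):
        s = best_setting(f, 300.0, K, C)
        print(f"{f} GHz: Icp={s}, BW ~ {predicted_bw_hz(f, s, K, C):.0f} Hz")
```

With real fit coefficients, such a lookup could run in the controller each time the frequency is changed, so that the bandwidth stays near the target without touching the loop filter.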

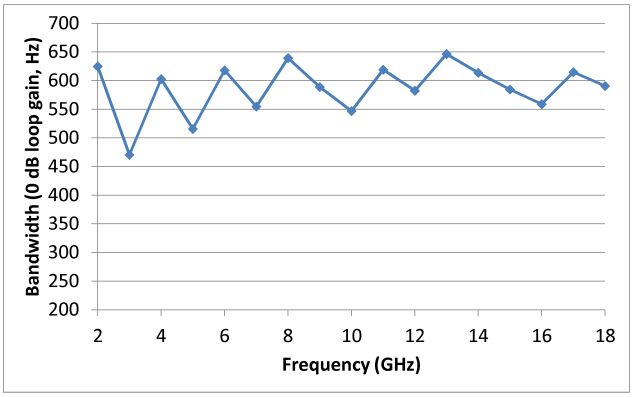

After all the measurements, things are now pretty clear: if we set the Icp current right, the BW can be kept stable over almost the full range, without any extra parts and switches, and about 300 Hz seems to be a reasonable compromise between PLL speed and stability.

Estimated PLL bandwidth (0 dB), using the Icp current adjustment of the ADF41020

At the lowest frequencies (2 GHz range), the BW is a bit larger than desired, but the loop still has 20 degrees of margin.

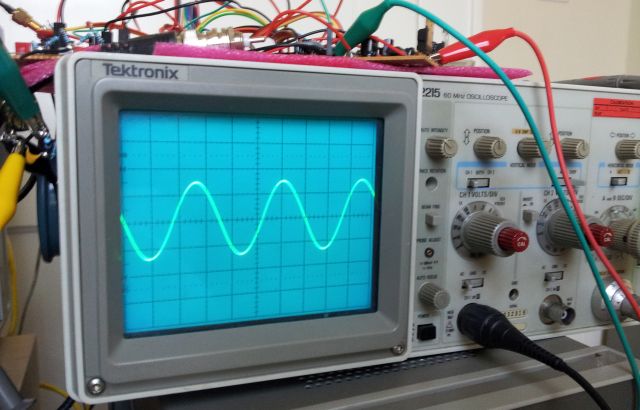

Well, with all the phase margins and uncertainties, is the loop really stable enough? To check this, what is typically done is to first try a few odd frequencies, at the start, end and in the middle of each band, and monitor the VCO control voltage with a scope for any oscillations or otherwise strange behavior. Then try a few small frequency steps, and see how the loop settles. This all went without any issues.

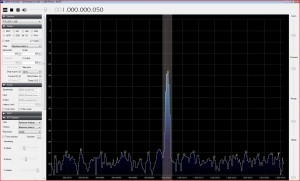

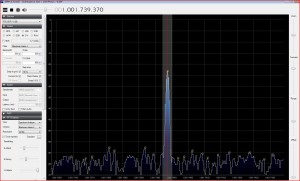

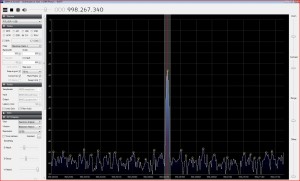

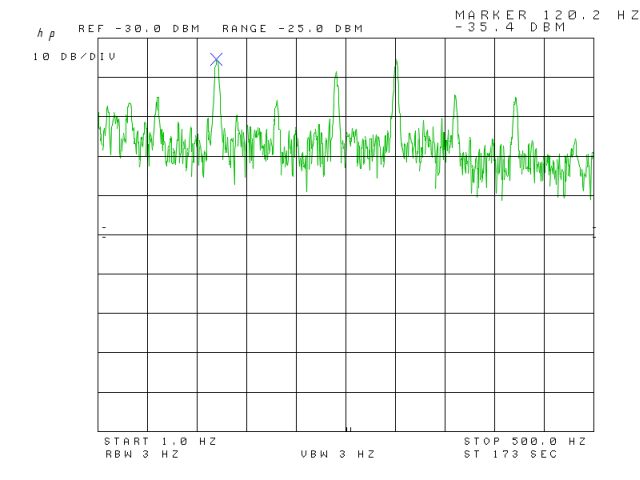

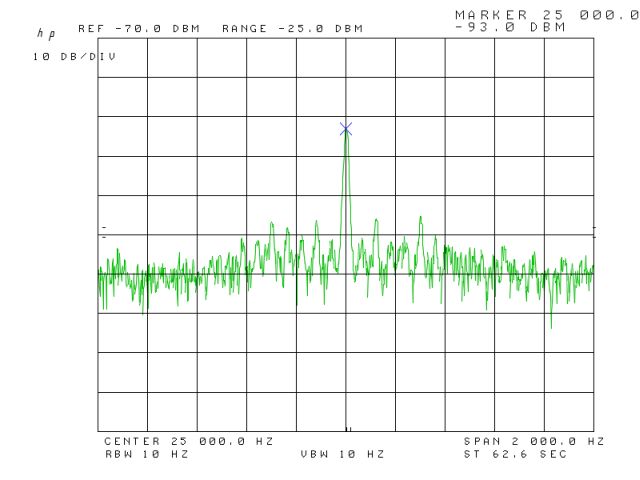

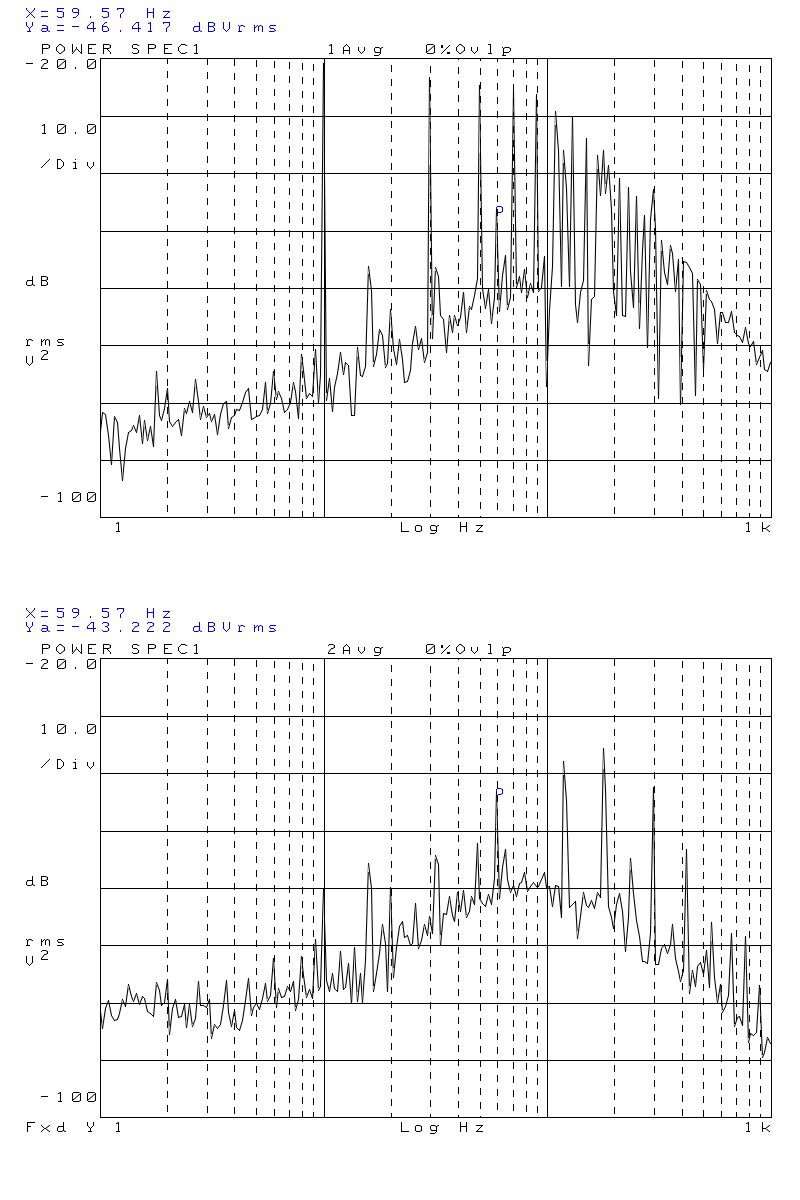

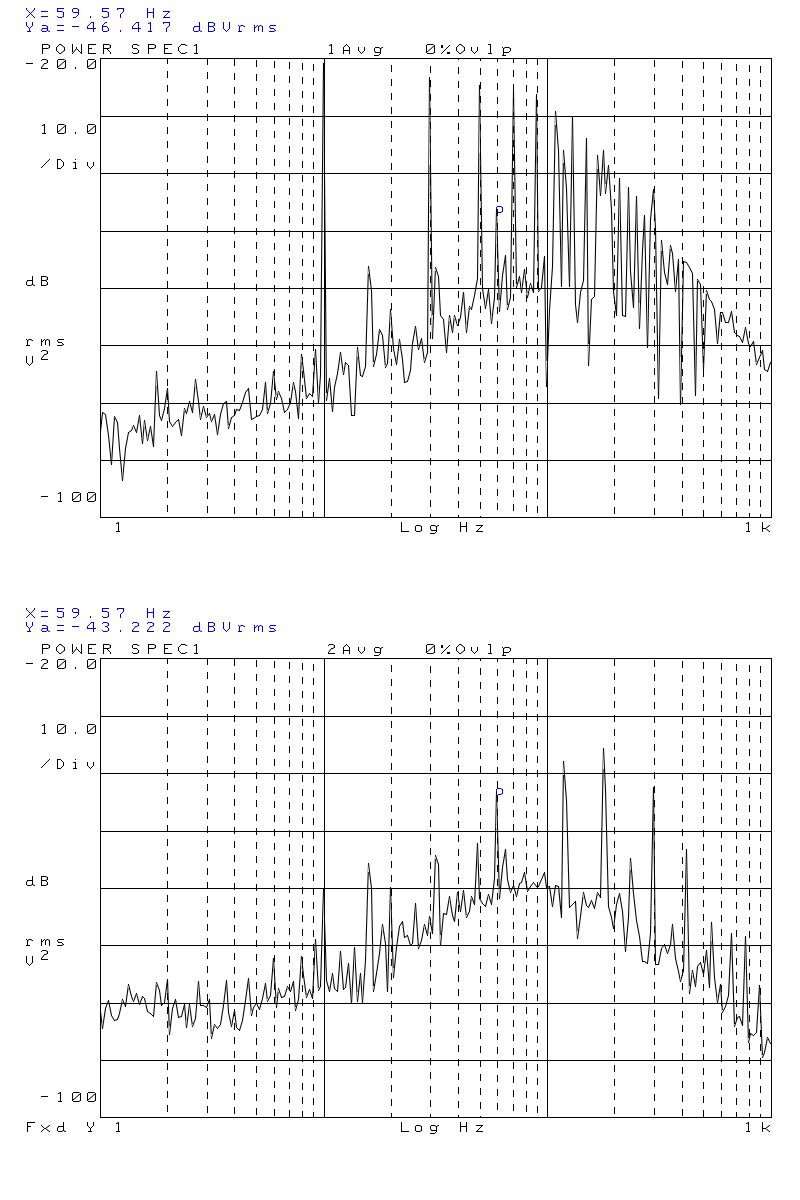

Still, to be sure, especially close to 2 GHz (increased bandwidth), a test was performed by injecting a 100 mV (nominal), 10 Hz square wave via the test port mentioned above. The loop output spectra showed that this worked, and that the 10 Hz contribution is significant, while still not swamping everything else and driving the loop out of lock right away.

Power spectra with test signal on (upper diagram), and off (lower diagram).

There are some spurs at 60 Hz and its harmonics, mainly due to coupling of 60 Hz into the coarse tune line, which is just a plain coax cable that doesn’t provide any good shielding against 60 Hz (or 50 Hz, in Europe) interference.

Needless to say, the PLL will not stop working right away when the phase hits 0 degrees at the 0 dB point. As the phase margin vs. bandwidth plot above shows, measurement was still possible even at negative phase, as long as the amplitude of the test signal was kept small.

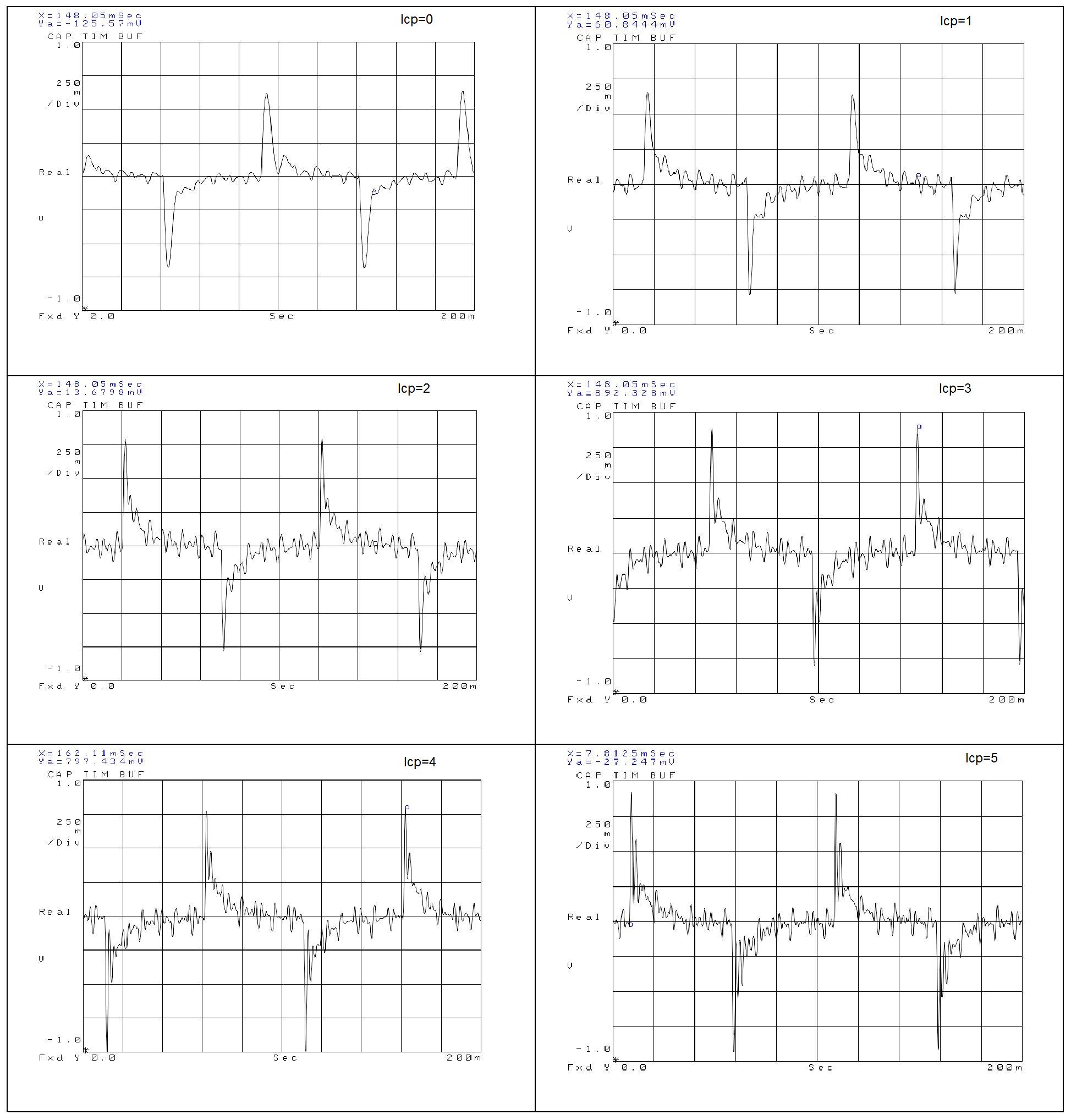

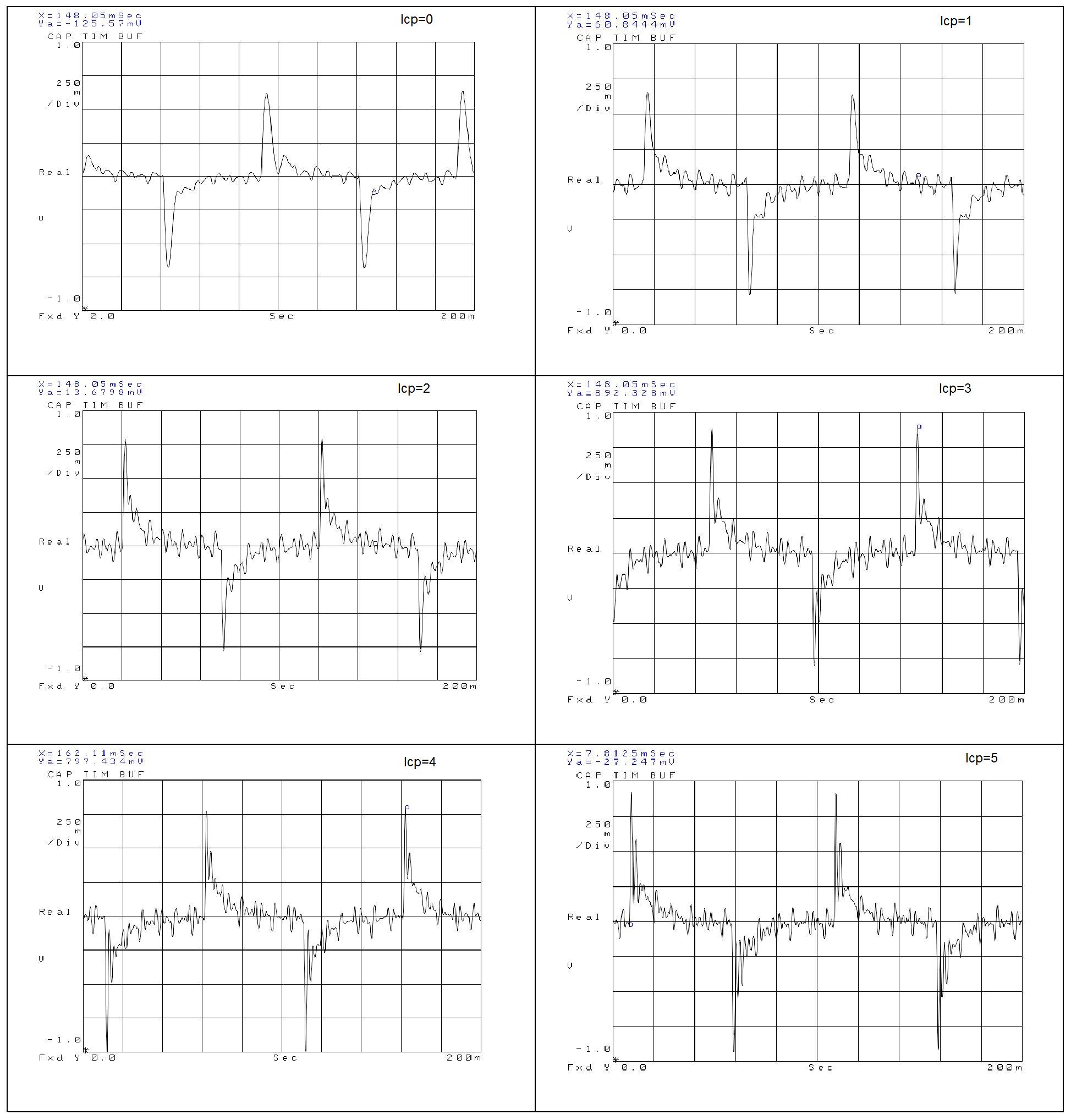

There will be signs of instability, and this is what this test reveals. So the frequency was set again to 2.2221 GHz, and the charge pump current Icp increased step by step, from 0 to 5. At 6 and 7, no phase lock could be achieved: the loop was fully unstable.

Step response (AC component only, square wave, 10 Hz at nominal 100 mV, supplied to test port)

Icp=0: this is the most stable condition, with a phase margin of about 20 degrees. Already at Icp=1, with a phase margin of about 3 degrees, stability is much compromised: there is considerably more noise, not only in the step response, but also during the steady portions. At Icp=2 and above, the phase margin is negative. Phase lock still holds, but the loop will not re-lock once lock is lost, and the pulse response suggests staying away from such regions.